Xargs in Linux: Using bash -c to Create a Group of Commands

Xargs is a command used in a UNIX shell to turn data read from standard input into arguments for another command.

In other words, with xargs, the output of one command becomes the arguments of another command.

That is the core idea behind this amazing Linux command. Before we move on to the advanced techniques, let's take a closer look at xargs.

Why xargs is used in Linux

On Linux, xargs helps us combine more than one operation/command on a single line, without writing a complex function, which makes our life easier.

To understand better why we use xargs in Linux, imagine three operations in this sequence: List, Filter (Optional), Action.

Let's break down the three operations:

List: This can be the result of any command that produces a list. For example, when you run ls -l or find in a specific directory, the output is a list of all files and sub-directories.

Filter: You can filter the List to keep only the items you want to apply a specific operation/action to, for example, with the grep command. This step is optional: whether you filter depends on you and on your scenario. We can also extract values from items with the awk command.

Operation: The Operation can be any action that we want to perform for each item in the List. In other words, it can be any command that you want to execute, using the items from the List as its parameters. For example, suppose we have a couple of images in our directory: we list them with the find command, filter the List by applying a regex to the file names, and then execute the rm command to delete them.

Now, let’s see the hard way to delete these images and then see the more straightforward approach (using xargs).

First, the hardest way, whose effort depends on how many files you need to delete (or perform any other operation on): doing it 100% manually, going to the folder and deleting the files one by one in the Terminal.

Second, we can write a Shell Script that uses a simple FOR loop to iterate over the files in the directory and delete the ones we want, like this:

#!/bin/bash
# Filter and get only image files with the .png extension
FILES="./*.png"
for f in $FILES
do
  echo "Deleting $f file..."
  # Take action on each image; $f stores the current image file name
  rm -f "$f"
done

So, we have seven lines in this Shell Script to delete the files that we want.

Here's the truth: the FOR loop above is not complex, but writing a Shell Script for that purpose alone is not a good deal, and you will see why it's not worth it.

Finally, let’s see how we can achieve the same goal using xargs:

find . -name "*.png" | xargs rm

Which approach is easier? Of course, xargs is the easier one.

Looking closely at the xargs command above, you can see how it works and why xargs is used in Linux.
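One caveat about that one-liner: by default, xargs splits its input on blanks and newlines, so a file name containing a space reaches rm as two separate arguments. A common remedy, supported by both GNU and BSD find/xargs, is to pair find's -print0 with xargs -0. The sketch below works in a throwaway directory created with mktemp, and the sample file names are made up for the demo.

```shell
#!/bin/bash
# Work in a throwaway directory so the demo cannot touch real files.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

# Hypothetical sample files; one name contains a space.
touch "photo one.png" photo2.png notes.txt

# -print0 ends each path with a NUL byte, and xargs -0 splits only on NUL,
# so "./photo one.png" reaches rm as a single argument.
find . -name "*.png" -print0 | xargs -0 rm

ls    # prints: notes.txt
```

Note that, unlike the FOR loop, find also descends into subdirectories.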

But wait – there’s more.

And if you also need to filter by file name:

find . -name "bitslovers-*.png" | xargs rm

Sounds good, right?

xargs vs. for loop

Now for another part of the story.

You are probably wondering: when should I NOT use xargs? Or when should I use a FOR loop?

Here’s the thing:

There are some cases where xargs on its own is not enough.

For example:

1 – If you need to execute more than one Operation for the same item (file) from the List.

2 – If you need to check a condition before acting, for example, a prerequisite that decides whether the Operation should run at all.

In those specific scenarios, a Shell Script with FOR loop may be necessary.
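To make those two cases concrete, here is a small sketch in a throwaway directory (created with mktemp). The scenario is hypothetical: for each .png file, keep a copy only if the file is non-empty (a condition), then delete the original (a second Operation on the same item).

```shell
#!/bin/bash
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
mkdir kept

# Hypothetical sample data: one non-empty image and one empty one.
echo "image data" > big.png
: > empty.png

for f in ./*.png
do
  [ -e "$f" ] || continue      # the glob matched nothing
  if [ -s "$f" ]; then         # condition: the file is non-empty
    cp "$f" kept/              # operation 1: keep a copy
  fi
  rm -f "$f"                   # operation 2: delete the original
done

ls kept    # prints: big.png
```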

Xargs multiple commands

You may have noticed that xargs also links multiple commands. That is possible because we use the pipe. So, in the example above, we have two operations/commands: find and rm.

However, you can chain even more commands with xargs. There is no fixed limit, and in the example below, we have three commands together: find, grep, and rm.

How to use xargs with find and grep

Example: using grep with xargs

find . -name "*.png" | grep "bitslovers-" | xargs rm

Let’s see another example:

Using xargs to kill multiple processes

ps aux | grep java | awk '{print $2}' | xargs kill

The command above lists all processes running on your computer, keeps only the lines that contain the word “java”, and kills those processes.

This example also shows that you can filter the List multiple times by chaining filters with pipes. The second filter, awk, extracts the process ID (the second column of the ps aux output) and sends it to the kill command as a parameter.
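Two pitfalls are worth knowing with that pipeline. If no process matches, GNU xargs still runs kill once with no PID, which only prints a usage error; GNU xargs offers -r (--no-run-if-empty) to skip the run entirely. Also, grep java can match the grep process itself, and writing the pattern as '[j]ava' is a common trick to avoid that. The sketch below demonstrates -r with echo (assuming GNU xargs), so it is safe to run; the last line shows both tweaks applied to the kill example and is commented out on purpose.

```shell
#!/bin/bash
# Empty input: GNU xargs still runs the command once without -r.
without_r=$(printf '' | xargs echo "ran with:")
echo "$without_r"    # prints: ran with:

# With -r (GNU --no-run-if-empty), nothing runs when the input is empty.
with_r=$(printf '' | xargs -r echo "ran with:")
echo "[$with_r]"     # prints: []

# Both tweaks applied to the example above (not executed here):
# ps aux | grep '[j]ava' | awk '{print $2}' | xargs -r kill
```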

How to use Xargs

So, if you don't know how to use xargs, or whether it fits your scenario, remember the concept you just learned: List, Filter (optional), and Action. If your task matches this logical sequence, xargs is very likely a good fit.

The best way to learn xargs is to try it, and to analyze examples of where it can be applied.

It’s time to begin the advanced topic!

How does the xargs command work in Linux?

In layman's terms, in its most basic form, the tool reads data from standard input (stdin) and executes the command provided to it as an argument one or more times, based on the input it read. Blanks (spaces and tabs) and newlines in the input are treated as delimiters, while empty lines are ignored.
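A quick way to see this behavior is to feed xargs a small piece of text. The sketch below (assuming GNU xargs) shows that blanks and newlines both act as delimiters, that the empty line is skipped, and that by default all items go to a single run of the command, while -n 1 runs it once per item.

```shell
#!/bin/bash
# Input: "a b", an empty line, then "c".
# Default: all items are passed to one echo invocation.
together=$(printf 'a b\n\nc\n' | xargs echo)
echo "$together"    # prints: a b c

# -n 1: at most one argument per command line, so echo runs three times.
separate=$(printf 'a b\n\nc\n' | xargs -n 1 echo)
echo "$separate"    # prints a, b and c on separate lines
```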

Now let's concentrate on a particular problem: what do you do when you run into the limits of traditional pipe-based chaining, or of the common sequencing of Linux commands with ;, and even xargs does not seem to provide a direct answer?

This often happens when writing one-liner scripts at the command line, and when processing complex data or data structures. The information presented here is based on research and many years of practice with this particular method and approach.

Pipes and xargs

In my experience, anyone learning Bash will improve their command-line scripting skills over time. The great thing about Bash is that any skills acquired at the command line carry over directly to Bash scripts, since the syntax is practically indistinguishable.

And, as their skills improve, newer engineers usually discover pipes first. Using a pipe is simple and straightforward: the previous command's output is ‘piped’ into the next command's input. Think of it as a water pipe carrying water from a source to a destination elsewhere, such as water from a dam piped to a water turbine.

Let’s look at an easy example:

echo 'a' | sed 's/a/b/'

Sooner or later, you will realize that pipes have limited capabilities, particularly when you want to pre-process data into a format ready for the next tool.

Let me explain why.

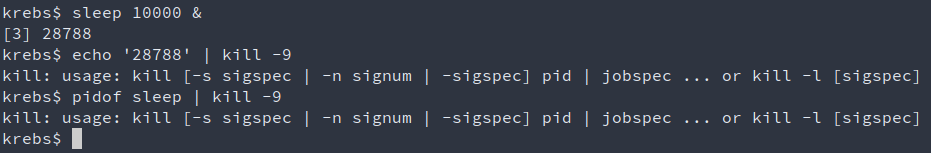

For example, consider this condition:

Here we start a sleep process in the background. Next, we run pidof to get the PID of that sleep command and try to kill it with kill -9 (think of -9, SIGKILL, as a forceful way to kill a process).

As the output shows, it fails. We then try to use the PID reported by the shell when we started the background process, but that fails as well.

How xargs in Linux makes this better

The problem is that kill does not read its input from stdin, whether that input comes from ps or even from a simple echo. To fix this, we can use xargs to take the output of the ps (or echo) command and pass it to kill as arguments. It is as if we had executed kill -9 any_pid directly.
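The failure can be reproduced with a minimal sketch like the one below. It uses $! (the PID of the last background job) instead of pidof, so only the sleep started here is affected, and it uses kill -0 purely to check whether the process still exists.

```shell
#!/bin/bash
# Start a long-running background process; $! holds its PID.
sleep 10000 &
pid=$!

# Piping the PID into kill fails: kill does not read stdin, so it
# receives no PID argument and exits with a usage error.
echo "$pid" | kill -9 2>/dev/null || echo "kill got no PID argument"

# kill -0 sends no signal; it only checks that the process still exists.
if kill -0 "$pid" 2>/dev/null; then
  alive_after_pipe=yes
  echo "sleep ($pid) is still running"
fi

# Clean up by passing the PID as an argument, then reap it quietly
# (wait reports 137 = 128 + SIGKILL, which is expected here).
kill -9 "$pid"
wait "$pid" 2>/dev/null || true
```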

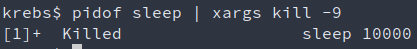

Here’s the deal. Let’s see how this goes:

sleep 10000 &

pidof sleep | xargs kill -9
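To see exactly what xargs hands to kill, you can add the -t option, which prints each constructed command line to stderr before running it. This sketch again uses $! instead of pidof so that only the sleep started here is targeted; it assumes a kill executable is on the PATH, since xargs cannot call the shell builtin.

```shell
#!/bin/bash
sleep 10000 &
pid=$!

# -t echoes the constructed command to stderr; capture it for inspection.
trace=$(echo "$pid" | xargs -t kill -9 2>&1)
echo "$trace"    # shows: kill -9 followed by the PID

# Reap the killed job quietly (wait reports 137 = 128 + SIGKILL).
wait "$pid" 2>/dev/null || true
```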

Sounds good, right?

This approach works well and does exactly what we set out to do: kill the sleep process. It is a slight change to the code (adding xargs in front of kill), yet a significant change in how powerful Bash can be for the developing engineer!

Still not convinced of the power of xargs? It doesn't stop there.

Let's dive deeper into a more complex scenario, made easier with xargs

We can also use the -I option, which sets a replacement string, to make it more evident how we pass arguments to kill. Here, we define {} as the replacement string: whenever xargs sees {}, it substitutes the input it received from the previous command.
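A sketch of the -I form; as before, $! stands in for pidof sleep so only the process started here is targeted, and a kill executable is assumed to be on the PATH. It also shows that {} may appear more than once in the same command.

```shell
#!/bin/bash
sleep 10000 &
pid=$!

# -I{} makes {} a placeholder: xargs replaces every {} with the input line.
msg=$(echo "$pid" | xargs -I{} echo "About to kill PID {} (kill -9 {})")
echo "$msg"

# The same placeholder used with kill itself.
echo "$pid" | xargs -I{} kill -9 {}
wait "$pid" 2>/dev/null || true
```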

Still, even this has its shortcomings. What if we wanted to print some useful debug information inline, from inside the same statement? So far, that looks impossible.

We could post-process the output with a sed regex or insert a subshell ($()). Nevertheless, these approaches still have restrictions, especially when building complicated data streams without starting a new command and without using in-between temporary files.

What if we could leave these limitations behind once and for all, and be 100% free to create any Bash command line we like, using only pipes, xargs, and the Bash shell, without temporary in-between files and without starting a new command? It is possible.

Let me explain how. Let’s jump right in.

Embrace xargs with bash -c

As we have observed, even when combining pipes with xargs, you will still run into constraints in slightly more advanced, senior-level scripting. Let's take our previous example and add some debugging information without post-processing the result. Usually, this would not be easy to do, but not so with xargs combined with bash -c:

sleep 1000 &

pidof sleep | xargs -I{} echo "echo 'The PID of the sleep process was: {}'; kill -9 {}; echo 'The PID {} has now been terminated'" | xargs -I{} bash -c "{}"

Let's break down what happens.

Here we applied two xargs commands. The first one builds a custom command line from the output of the previous command in the pipe (pidof sleep), and the second xargs command executes that generated, custom-per-input (important!) command.

Why custom-per-input? With -I, xargs processes its input line by line and executes the given command once for each input line.
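The difference is easy to see with three input lines. In this sketch (assuming GNU xargs), plain xargs batches every line into one echo call, while -I{} runs echo once per line.

```shell
#!/bin/bash
# Without -I: all lines are batched into one echo call.
batched=$(printf '1\n2\n3\n' | xargs echo item)
echo "$batched"      # prints: item 1 2 3

# With -I{}: one echo call per input line.
per_line=$(printf '1\n2\n3\n' | xargs -I{} echo "item {}")
echo "$per_line"     # prints item 1, item 2, item 3 on separate lines
```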

What you should memorize

There is a lot of potential here. It means you can build any custom command and then execute it, completely independent of the format the input data is in, and without having to worry about how to execute it. The only syntax you have to memorize is this:

any_command | xargs -I{} echo "echo '...{}...'; any_commands; any_commands_with_or_without{}" | xargs -I{} bash -c "{}"

Note that the nested echo (the second echo) is only needed when you want to print text. If the second echo were not there, the first echo would output ‘The PID …’ directly, and the bash -c subshell would be unable to parse that as a command: ‘The PID …’ is not a command and cannot be executed as such, hence the nested echo.

Once you memorize bash -c, the -I{} option, and the approach of echoing from inside another echo (alternatively, you could use escape sequences if needed), you will find yourself using this syntax over and over again.

Pretty easy, right? And it doesn't stop there.
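One caveat with splicing {} into a command string: the input becomes part of the shell code that bash -c parses, so a quote or a ; in the data can break the command or change what it does. A commonly recommended alternative (not the method described above) is to keep the script fixed in single quotes and pass each item as the positional parameter $1, after a placeholder for $0 (here _). The sketch assumes NUL-delimited input produced by printf and read with xargs -0, and the sample values are made up.

```shell
#!/bin/bash
# Hypothetical items: one contains a single quote, one a space.
# With -0, xargs splits only on NUL and does no quote processing.
out=$(printf '%s\0' "it's" "a b" \
  | xargs -0 -I{} bash -c 'echo "Processing: $1"; echo "Done: $1"' _ {})
echo "$out"
```

Because the item arrives as $1 instead of as source code, bash never tries to parse it, which is why the apostrophe in it's causes no trouble.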

Xargs in Linux with bash -c unlocks more possibilities

Let’s assume that you have to perform three actions per file in the directory:

1) output the file’s contents,

2) move it to a subdirectory,

3) delete it.

Usually, this would require several steps with separately staged commands, and if it gets more complicated, you may also need temporary files. But it is done very smoothly with bash -c and xargs.

I’ll show you:

echo 'BITSLOVERS' > file1
echo 'The best blog' > file2
echo 'Ever' > file3
mkdir backup
ls --color=never | grep -v backup | xargs -I{} echo "cat {}; mv {} backup; rm backup/{}" | xargs -I{} bash -c "{}"

First, it is always a good idea to use --color=never with ls, to avoid issues on systems that apply color coding to directory listings (on by default in Ubuntu). If ls emits color codes into a pipe, those codes are prefixed to the directory listing entries and cause substantial parsing problems.

We first create three files, file1, file2, and file3, and a subdirectory named backup. We exclude backup from the directory listing with grep -v, after noticing in a quick test run (without the second xargs, so nothing is executed) that backup still shows up in the list. Because the pipeline acts on every entry that ls lists, run it only in an empty scratch directory.

It is always essential to test your composite commands first by examining the output before passing them to a bash subshell with bash -c for execution.
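That test-first advice can be put into practice by stopping the pipeline before the second xargs, which only prints the generated commands. The sketch below does this in a throwaway directory created with mktemp, with the rm target written as backup/{}, and then runs the same commands through bash -c once the plan looks right (GNU ls is assumed for --color=never).

```shell
#!/bin/bash
# Run in a throwaway directory: the pipeline acts on every entry ls lists.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1
echo 'BITSLOVERS' > file1
echo 'The best blog' > file2
echo 'Ever' > file3
mkdir backup

# Dry run: stop before the second xargs and just print the generated commands.
plan=$(ls --color=never | grep -v backup \
  | xargs -I{} echo "cat {}; mv {} backup; rm backup/{}")
echo "$plan"

# The plan looks right, so pipe the same commands into bash -c.
ls --color=never | grep -v backup \
  | xargs -I{} echo "cat {}; mv {} backup; rm backup/{}" \
  | xargs -I{} bash -c "{}"
```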

Lastly, we use the same method as before to build our command: cat (show) the file, move the file (indicated by {}) to the backup directory, and finally remove the file inside backup. We see the contents of the three files (1, 2, 3) on screen, and if we examine the current directory, our files are gone. There are no files left in the backup subdirectory either. All went well.

Conclusion

Using xargs is an excellent skill for an advanced Linux user (and, in these examples, Bash user). Combining xargs with bash -c brings far greater power: the ability to build fully custom, complex command lines without intermediary files or constructs, and without the need for stacked or sequenced commands.

And it doesn’t stop there.

We can also combine xargs with many other commands, like find, cp, and tar, to manage files. For more specific information about each xargs option, see the xargs man page by typing man xargs in your terminal. If you have any issues or feedback, feel free to comment.

Thanks, Bits Lovers!
