Autoscaling GitLab CI on AWS Fargate

New cloud computing technologies are having a positive impact on software development. At the same time, we need more powerful hardware to build complex applications and stay productive. And when it comes to scaling the process that releases our applications, designing every step can be tricky.

GitLab helps us design the workflow we use to develop and release our applications, and it offers several approaches to reach that goal efficiently.

To provide a good experience, GitLab decouples the build process from the GitLab server. The builds run on a Runner, a separate server that builds our projects behind the scenes. GitLab uses it each time we need to perform a build or release.

Today we will talk about how to scale the build process and save money at the same time.

It looks great, right?

What is AWS Fargate?

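AWS Fargate is a serverless compute engine for containers. It runs containers on Amazon ECS or EKS without requiring you to provision or manage the underlying servers.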

When to Use Autoscaling GitLab CI on AWS Fargate

Why scale the build process? In medium and large companies, with a considerable number of developers and applications, it is common to overload the Runner with several builds at once: automated tests, or the compilation of Node.js, C++, or Java projects, all of which need a lot of resources. A typical case is a QA team running automated Cypress tests in a headless browser with several threads in parallel. That kind of job slows everything down and hurts the experience of other developers when everybody tries to build at the same time.

Building an application is also not the only goal of a pipeline. Some projects deploy to Development, Testing, or Production environments. That is a lot of activity for a single Runner serving a large Development and DevOps team.

The GitLab server itself is not affected by the number of pipelines running simultaneously, because all the work happens on the Runner. So we only need to scale the Runner.

Autoscaling Gitlab Runner on AWS: Alternatives

There are several ways to create a Runner, register it on GitLab, and then build your application.

There are also two different approaches to launching the Runner using AWS Fargate.

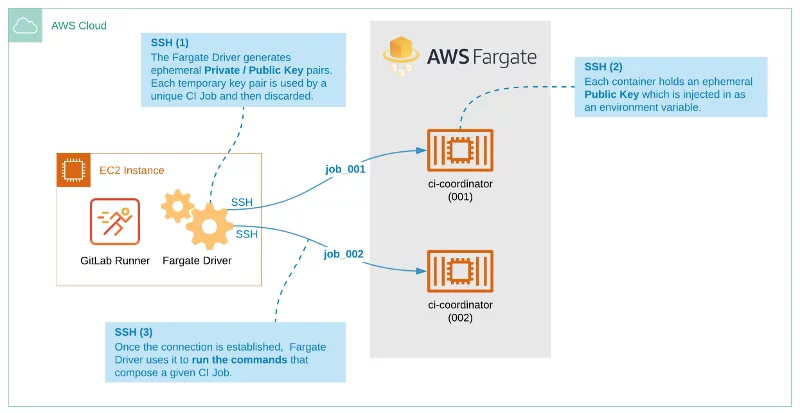

The first approach runs every job on AWS Fargate, but it still needs a Runner server: a regular EC2 instance with the Fargate driver.

When you push a commit, GitLab asks the Runner to start the pipeline. The Runner then creates a Task on Fargate, which starts a container to run your job. All communication between the Runner and the executor container goes over SSH.

Gitlab Runner on AWS Fargate: Important Notes

This solution has a hard limitation: it does not support docker-in-docker builds. If you don’t need that functionality, it may be good enough for you. This post, however, uses a different and much better solution. Let’s continue!

The Best Solution: Autoscaling GitLab CI on AWS Fargate

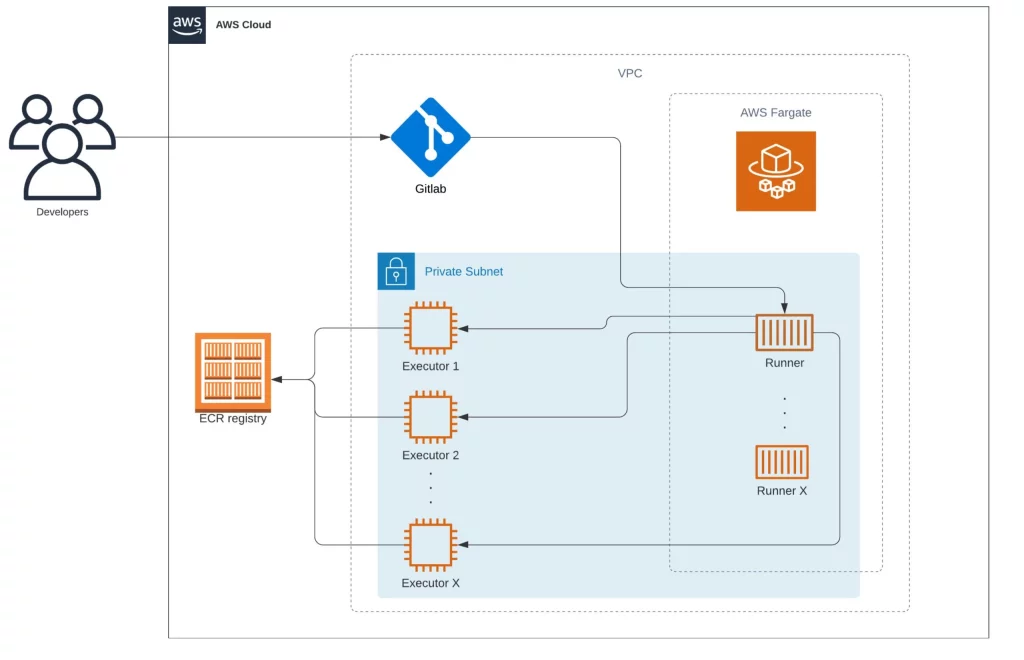

This solution uses Fargate too, but here the Runner is the serverless part. That means we don’t need to provision an EC2 instance for the Runner, although we still need to register it on GitLab.

The best part of this solution is that it does support the docker-in-docker build!

This solution creates EC2 instances (the Executors) on demand when you trigger a pipeline on GitLab.

Let me explain how this workflow works. First, the resources that are part of this solution:

- GitLab

- One ECS Cluster

- One Docker image (the Runner) running on Fargate

- An Executor (one or more EC2 instances created on demand by the Fargate Runner)

These four parts talk to each other in this workflow:

The Runner, a serverless service running in the cloud, waits for jobs from GitLab and then creates new Executors to process them.

Say you have configured “Project B” to build one application. When we trigger the build, GitLab figures out which Runner to use. The job is then handed to that Runner through the GitLab API (the Runner polls GitLab for pending jobs).

In our solution, the Runner is not a server. Instead, it is a serverless service that runs continuously, waiting to receive a job from GitLab. It registers on GitLab the same way as any regular Runner.

Another difference is that the build doesn’t happen on the serverless Runner. Instead, the Runner creates an “Executor” server that is launched only to perform a single job. This approach remains secure, because each build is isolated from the others.

At the end of the build, the Runner destroys the Executor server.

CloudFormation for Autoscaling GitLab CI on AWS Fargate

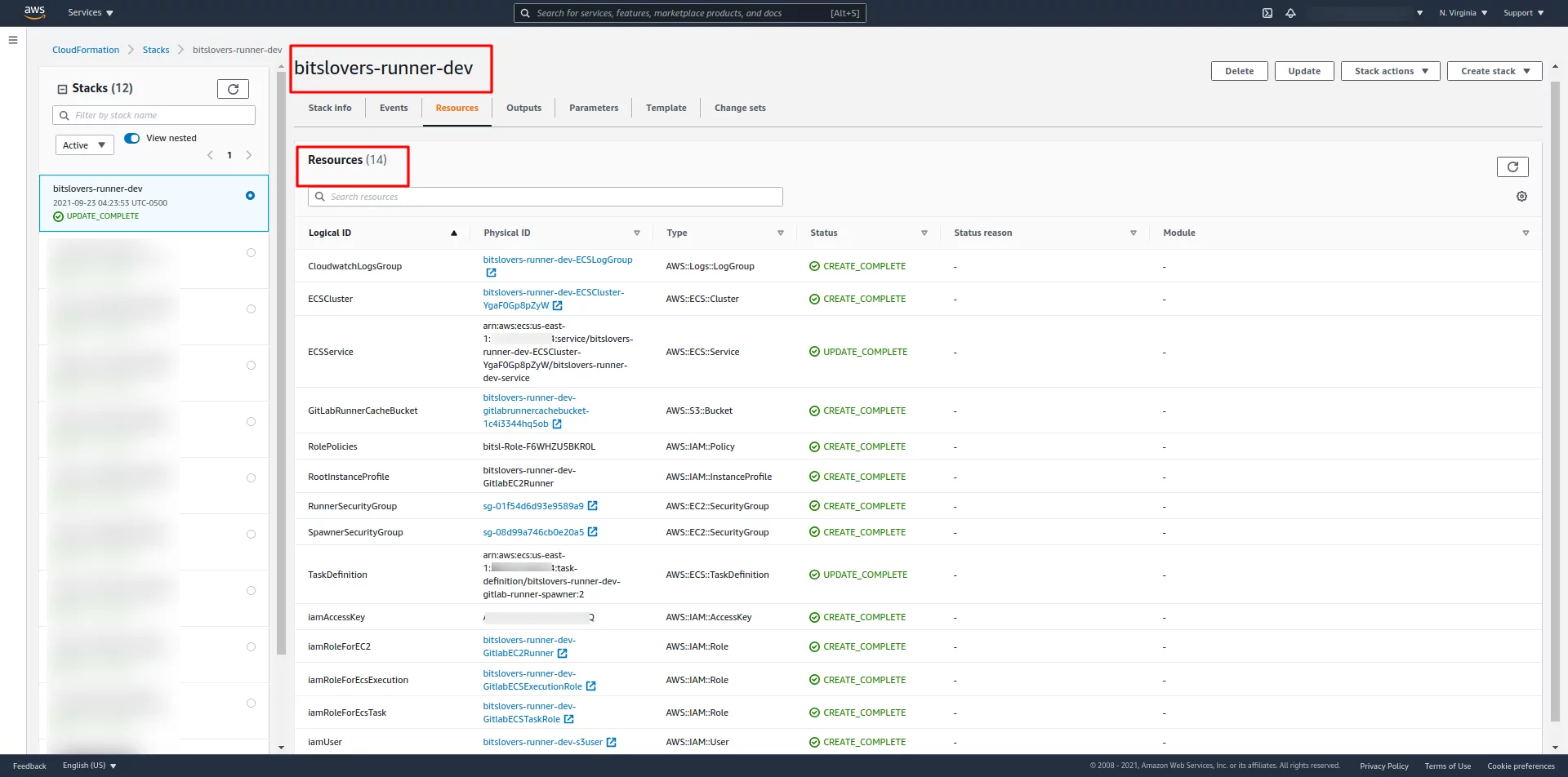

To simplify deploying the Autoscaling GitLab CI on AWS Fargate, I created a CloudFormation template that generates the ECS Cluster and the Fargate Task.

The template is available in my GitHub account, and it creates the GitLab Runner.

The diagram below shows all resources and the relationship between them:

Deploying Autoscaling GitLab CI on AWS Fargate using CloudFormation

There are several ways to execute this CloudFormation template:

You can use the AWS command-line tool, or you can upload the file through the AWS console, in which case AWS automatically stores the template in a temporary S3 bucket.
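As a rough sketch of the command-line route, the AWS CLI command `aws cloudformation deploy` can take the template and the parameter values directly. The parameter names are the ones used in the .gitlab-ci.yml example in this post; the values, the local template.yml path, and the DRY_RUN switch are assumptions for illustration only.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder values (assumptions): replace them with your own.
STACK_NAME="${STACK_NAME:-bitslovers-runner-dev}"
AWS_REGION="${AWS_REGION:-us-east-1}"

# Build the command as an array so every argument stays a single word.
deploy_cmd=(
  aws cloudformation deploy
  --template-file template.yml
  --stack-name "$STACK_NAME"
  --capabilities CAPABILITY_NAMED_IAM
  --region "$AWS_REGION"
  --parameter-overrides
  "VpcId=${VPC_IP:-}"
  "SubnetId=${SUBNET:-}"
  "SubnetZoneEC2Runner=${AWS_SUBNET_ZONE:-a}"
  "GitLabURL=${GITLAB_RUL:-http://YOUR_GITLAB.COM}"
  "GitLabRegistrationToken=${GITLAB_TOKEN:-}"
  "RunnerTagList=${RUNNER_TAG:-aws-fargate-dev}"
  "DockerImageRunner=${DOCKER_IMAGE_RUNNER:-}"
)

# DRY_RUN is a hypothetical switch: by default the command is only printed.
if [ "${DRY_RUN:-1}" = "1" ]; then
  printf '%q ' "${deploy_cmd[@]}"
  printf '\n'
else
  "${deploy_cmd[@]}"
fi
```

Run it with `DRY_RUN=0` once the empty values are filled in. Printing first makes it easy to review exactly what would be sent to CloudFormation.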

Using GitLab to deploy a CloudFormation template:

In my case, I have a project on GitLab with a pipeline that executes the CloudFormation template automatically. This makes it easy for anyone on your team to run it when needed. But it’s up to you to decide which method works best for you.

The GitHub repository above has an example .gitlab-ci.yml file that uses a Docker image (there is also a Dockerfile) to execute the CloudFormation template and the commands required to create the resources. In the last command, we pass the values for the CloudFormation parameters with “--parameter-overrides”, taken from the variables defined in the .gitlab-ci.yml.

variables:
  AWS_REGION: us-east-1
  GIT_SSL_NO_VERIFY: "true"
  MODE: BUILD
  STACK_NAME: 'bitslovers-runner-dev'
  VPC_IP: '' #VpcId
  SUBNET: '' #SubnetId
  GITLAB_RUL: 'http://YOUR_GITLAB.COM' #GitLabURL
  GITLAB_TOKEN: '' #GitLabRegistrationToken
  RUNNER_TAG: 'aws-fargate-dev' #RunnerTagList
  DOCKER_IMAGE_DEFAULT: 'alpine:latest' #DockerImageDefault
  DOCKER_IMAGE_RUNNER: 'XXXXXXXX.dkr.ecr.us-east-1.amazonaws.com/ec2-gitlab-runners-fargate:dev' #DockerImageRunner
  AWS_SUBNET_ZONE: 'a' #SubnetZoneEC2Runner

stages:
  - prep
  - deploy

Prep:
  image: docker:latest
  stage: prep
  script:
    - docker build -t build-container .

Deploy:
  image:
    name: build-container:latest
    entrypoint: [""]
  stage: deploy
  script:
    - aws configure set region ${AWS_REGION}
    - sam deploy --template-file template.yml --stack-name $STACK_NAME --capabilities CAPABILITY_NAMED_IAM --region us-east-1 --parameter-overrides VpcId=\"${VPC_IP}\" SubnetId=\"${SUBNET}\" SubnetZoneEC2Runner=\"${AWS_SUBNET_ZONE}\" GitLabURL=\"${GITLAB_RUL}\" GitLabRegistrationToken=\"${GITLAB_TOKEN}\" RunnerTagList=\"${RUNNER_TAG}\" DockerImageRunner=\"${DOCKER_IMAGE_RUNNER}\" RunnerIamRole=\"${RUNNER_IAM_PROFILE}\"

Before Creating the CloudFormation Stack

Regardless of the approach you chose, you must set some parameters of the CloudFormation template according to your environment: your VPC ID, Subnet ID, Stack name, GitLab URL, GitLab token (for registration), the tag for the Runner, and the Docker images. (You need a Docker image before executing the CloudFormation template; see the Dockerfile below for an example.)

Let me give you an overview of some of those variables:

DOCKER_IMAGE_DEFAULT -> The default Docker image that the Executor server loads to run your pipeline when you have not specified one in your .gitlab-ci.yml.

DOCKER_IMAGE_RUNNER -> The Docker image that is loaded on the Fargate Task. You can use an image from a public or private registry. This solution supports private ECR images.

GITLAB_TOKEN -> The token needed for the registration process. You can retrieve it at https://<your-gitlab-host>/admin/runners.

To make this work, your GitLab server must be reachable by both the Runner service and the Executor server. If you deploy the Runner in the same VPC as GitLab, the setup is easier. If not, make sure the Security Group of the GitLab server allows traffic from the Runner.

Once you have changed all the parameters, deploying the Autoscaling GitLab CI on AWS Fargate takes 30 minutes or less!

All Resources Needed for Autoscaling GitLab CI on AWS Fargate

After you create the CloudFormation stack, AWS creates several resources:

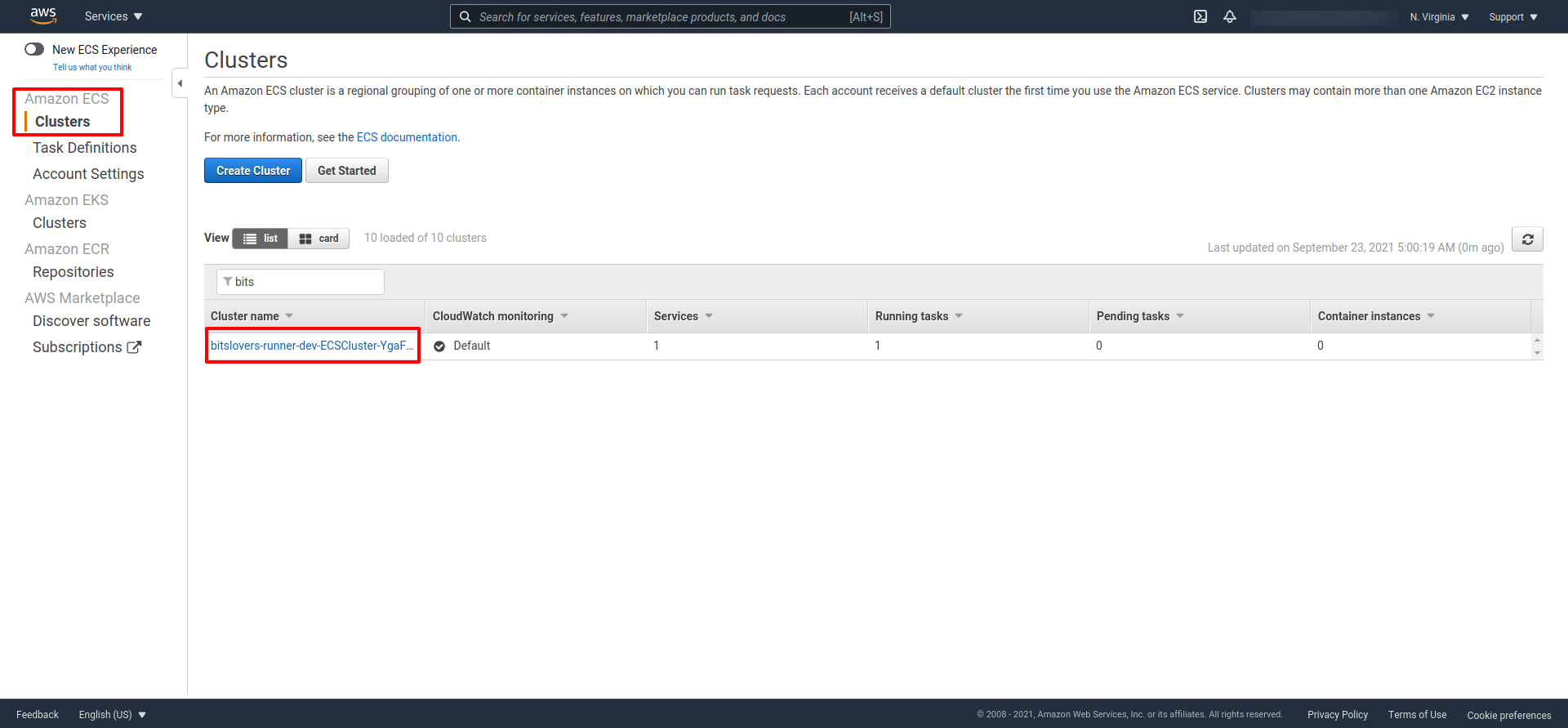

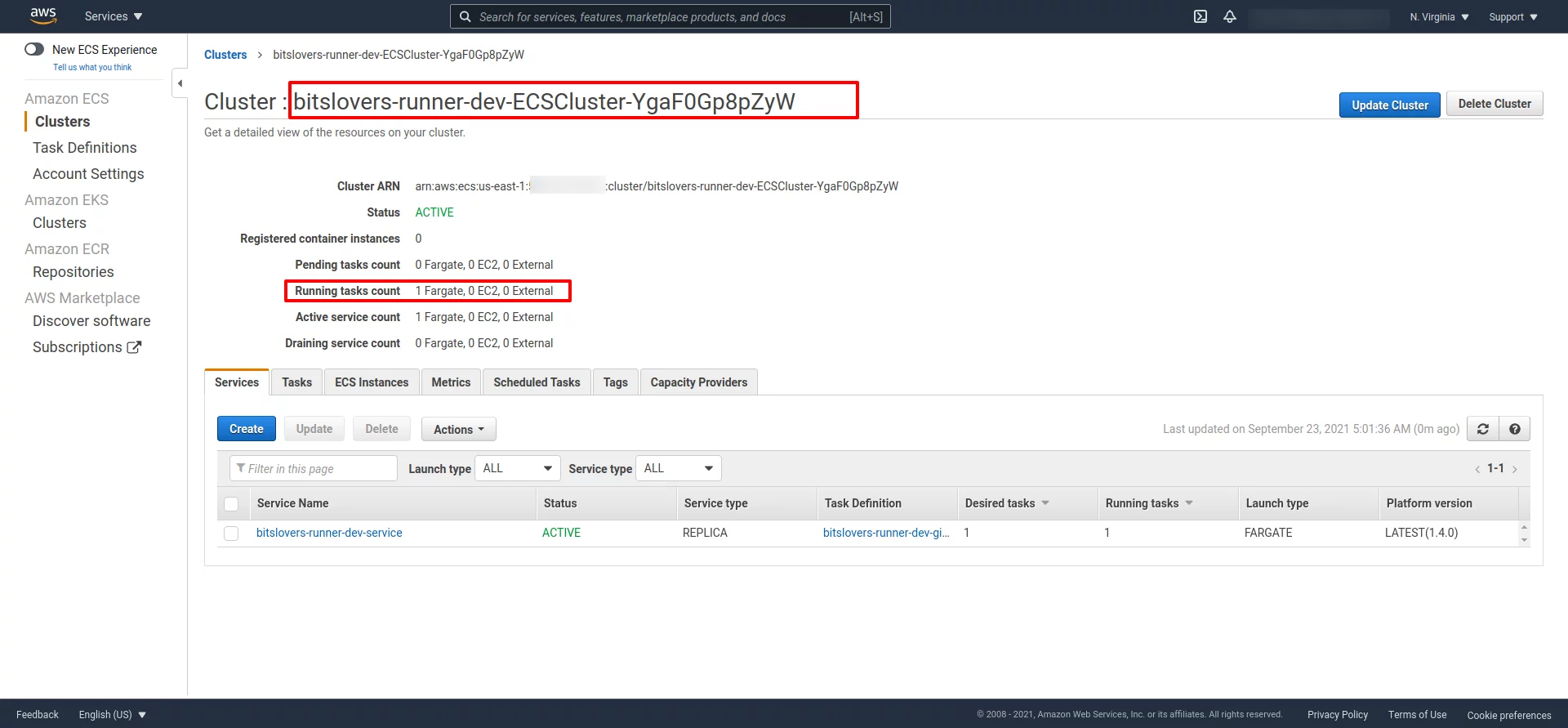

You should see the ECS Cluster:

And the ECS cluster should have similar details:

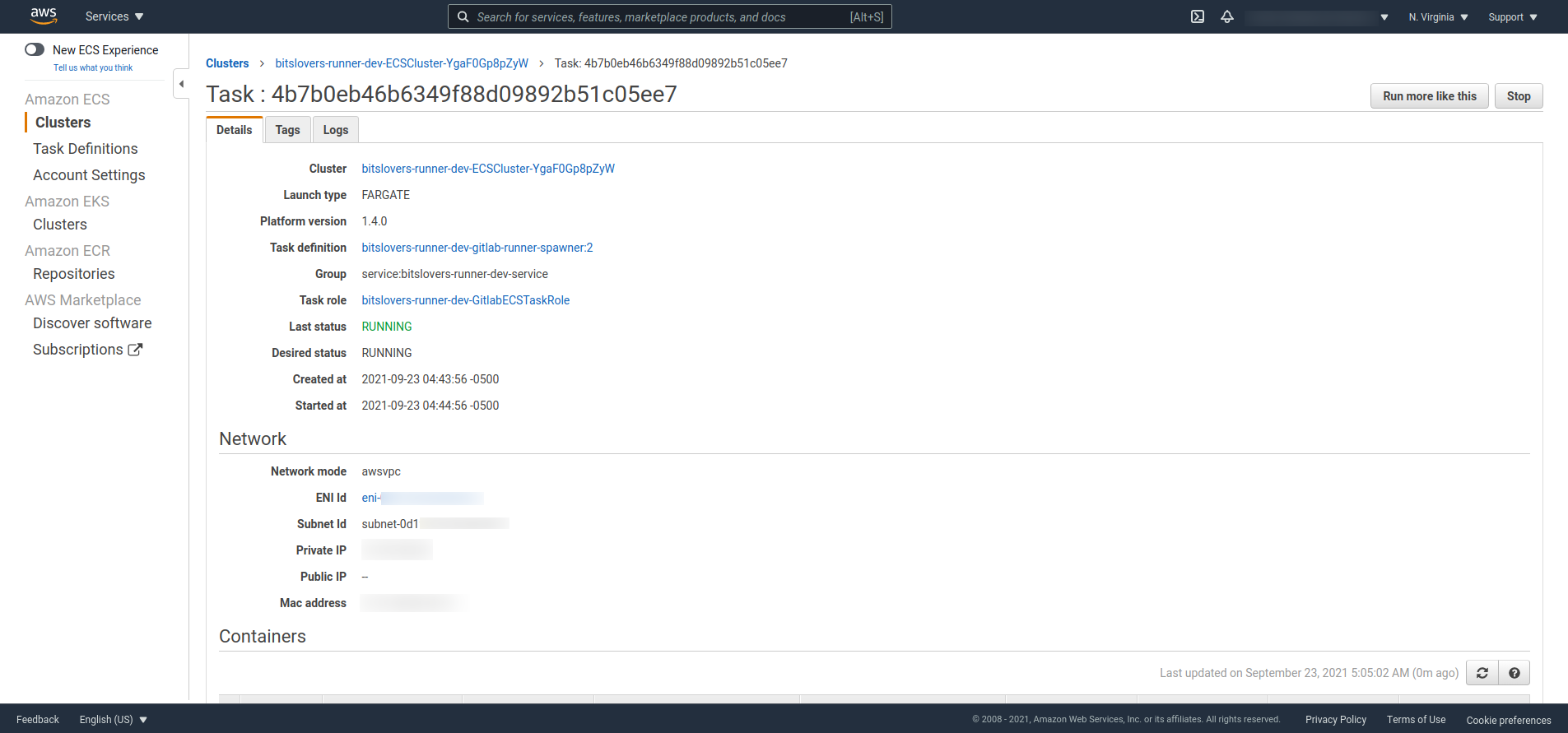

Then check that you can see the Task and that its status is “Running.”

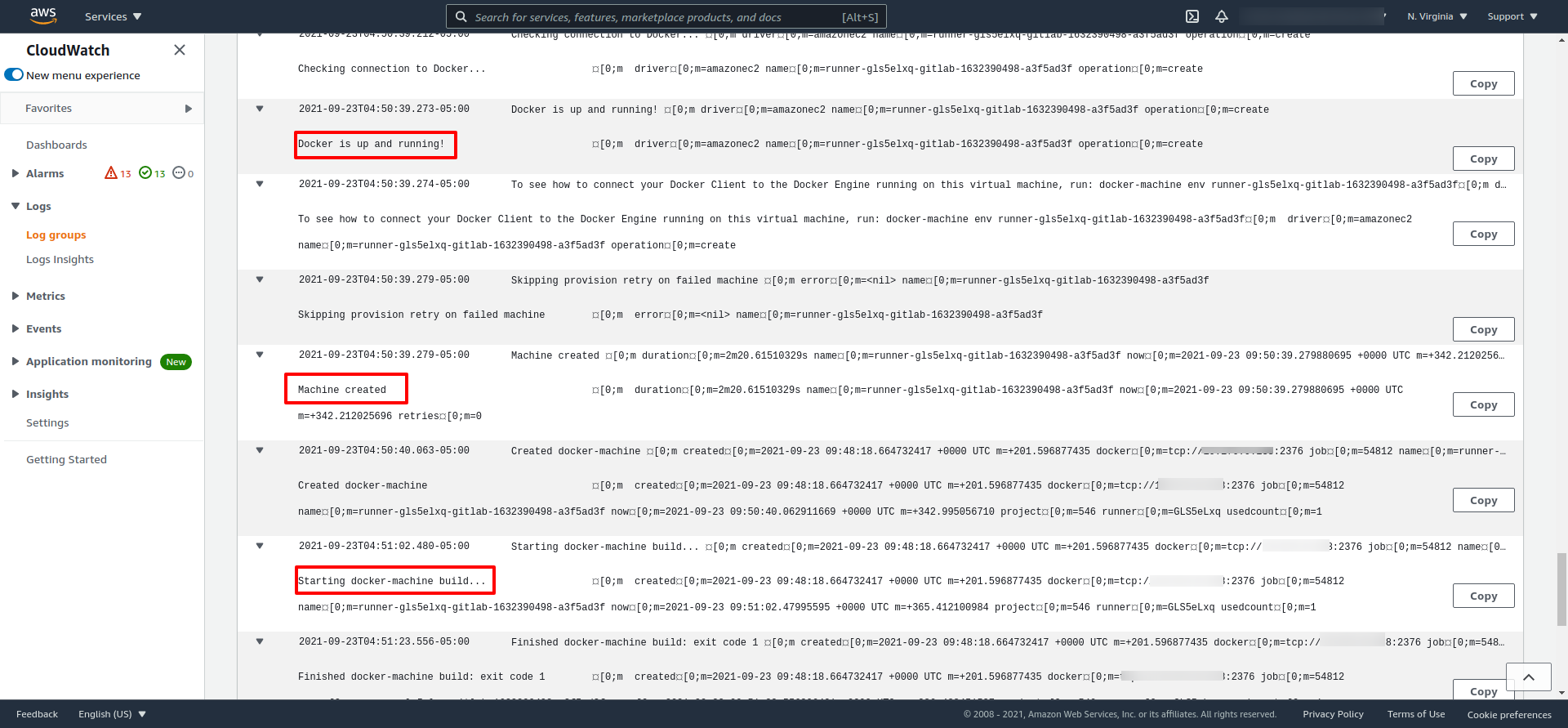
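The same check can be done from a terminal. This is a minimal sketch that assumes the AWS CLI is installed and configured; the function name and the cluster name in the usage line are hypothetical, so use the cluster created by your stack.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the last known status of every task in an ECS cluster.
# Usage: runner_task_status <cluster-name>
runner_task_status() {
  local cluster="$1"
  local task_arns

  # List the ARNs of the tasks in the cluster (whitespace-separated text).
  task_arns="$(aws ecs list-tasks --cluster "$cluster" \
    --query 'taskArns' --output text)"

  if [ -z "$task_arns" ] || [ "$task_arns" = "None" ]; then
    echo "NO_TASKS"
    return 0
  fi

  # Ask ECS for the status of those tasks; a healthy Runner shows RUNNING.
  # shellcheck disable=SC2086  # word splitting of the ARN list is intended
  aws ecs describe-tasks --cluster "$cluster" --tasks $task_arns \
    --query 'tasks[].lastStatus' --output text
}
```

For example, `runner_task_status my-runner-cluster` prints one status per task, or NO_TASKS when the cluster has no task at all.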

How to Troubleshoot the Runner on AWS Fargate

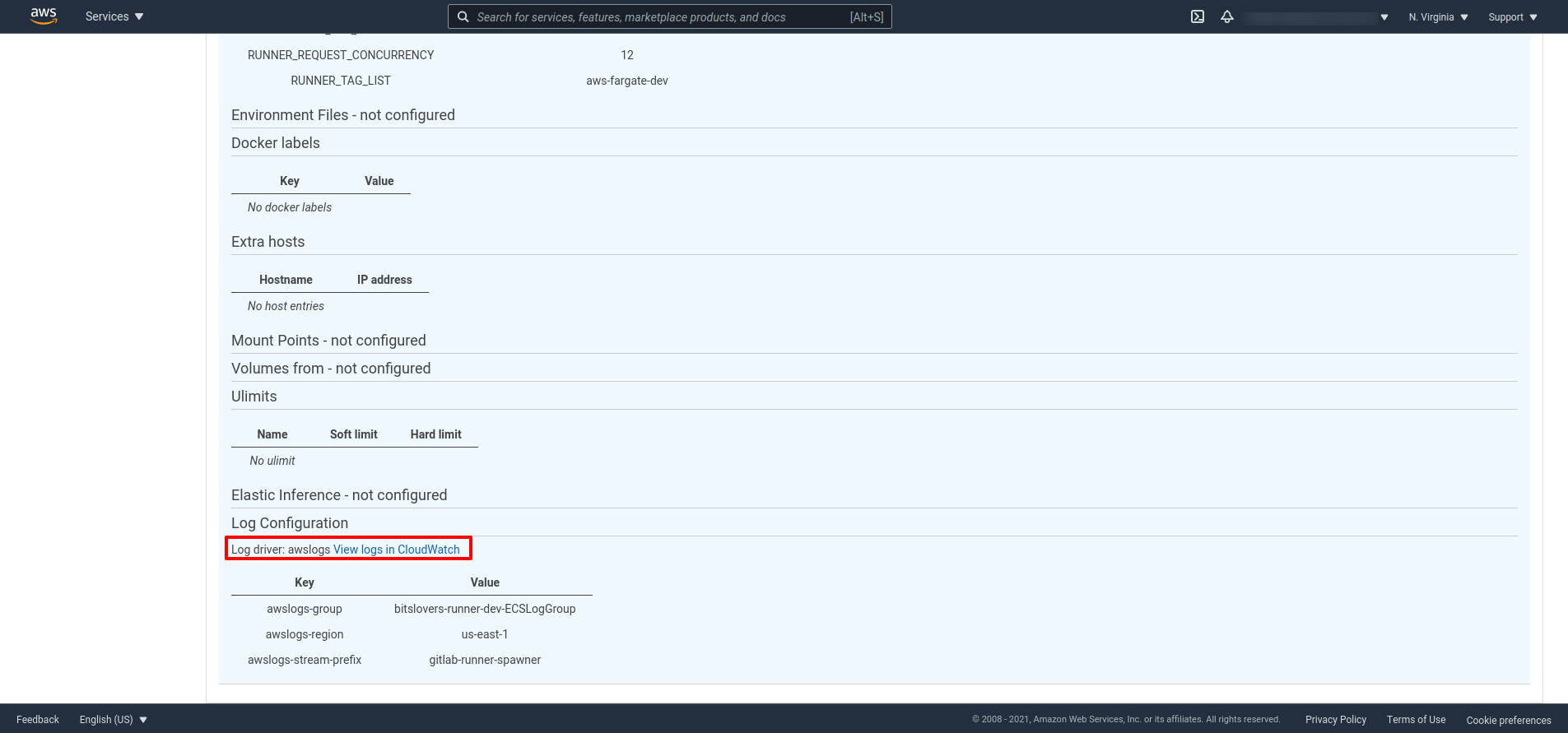

It’s also essential to know how to troubleshoot when you have trouble registering a Runner or when the Runner is failing to create the EC2 instance. To help with that, the CloudFormation template creates a CloudWatch log group with the logs for the registration process and for launching the EC2 instance (the Executor).

The easiest way to find the CloudWatch link is to expand the Task details, as in the screenshot below:

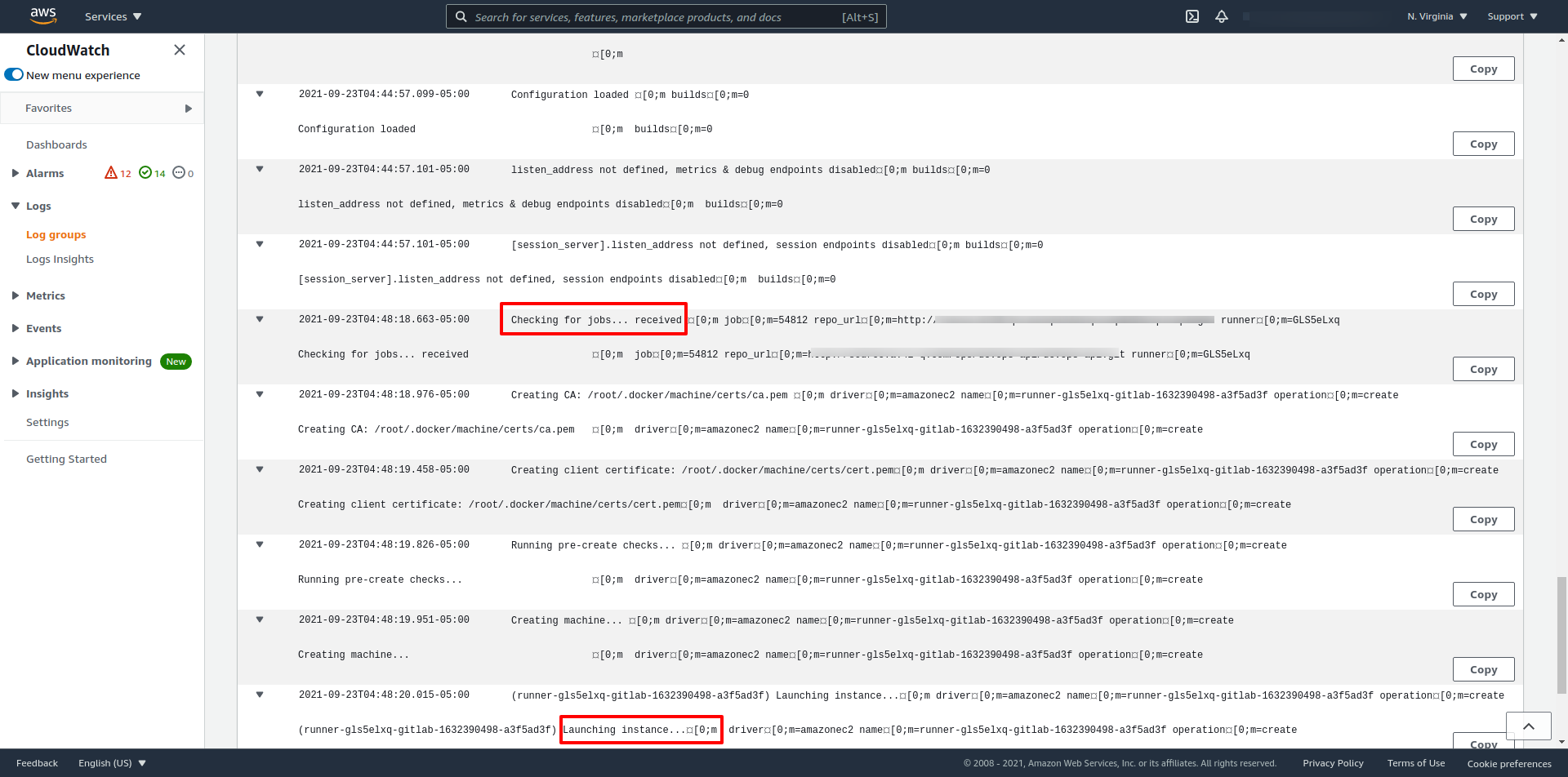

As an example, see what you should find in the log when the Runner receives a request from GitLab to execute a job:

Later, after the instance (the Executor) is created and provisioned, you can check when the Executor actually starts the pipeline.
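If you prefer the terminal to the console, version 2 of the AWS CLI can stream the same log group. A small sketch; the function name and the log group name are hypothetical, so copy the real group name from the CloudWatch link in the Task details.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Stream recent Runner logs from CloudWatch.
# Usage: tail_runner_logs <log-group-name> [since]
tail_runner_logs() {
  local log_group="$1"
  local since="${2:-15m}"   # how far back to start, for example 15m or 1h

  # "aws logs tail" needs AWS CLI v2; --follow keeps the stream open.
  aws logs tail "$log_group" --since "$since" --follow
}
```

Calling `tail_runner_logs my-runner-log-group 1h` shows the last hour and then keeps printing new lines, which is handy while you trigger a test pipeline.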

The Docker Image for the Runner on AWS Fargate

We also need to build a Docker image that will represent our Runner on Fargate.

You can find the Dockerfile for this image, which runs as a container on Fargate, in my GitHub account.

FROM ubuntu:20.04

LABEL maintainer="[email protected]"

# Install deps
RUN apt-get update && \
    apt-get install -y --no-install-recommends \
        ca-certificates \
        curl \
        git \
        amazon-ecr-credential-helper \
        dumb-init && \
    # Decrease docker image size
    rm -rf /var/lib/apt/lists/* && \
    # Install Gitlab Runner
    curl -LJO "https://gitlab-runner-downloads.s3.amazonaws.com/latest/deb/gitlab-runner_amd64.deb" && \
    dpkg -i gitlab-runner_amd64.deb && \
    # Install Docker Machine
    curl -L https://gitlab-docker-machine-downloads.s3.amazonaws.com/v0.16.2-gitlab.11/docker-machine-Linux-x86_64 > /usr/local/bin/docker-machine && \
    chmod +x /usr/local/bin/docker-machine

COPY ./entrypoint.sh ./entrypoint.sh

RUN mkdir -p /root/.docker/

COPY config.json /root/.docker/

ENV REGISTER_NON_INTERACTIVE=true

ENTRYPOINT ["/usr/bin/dumb-init", "--", "./entrypoint.sh" ]

In this Docker image, we add the dependencies required by the serverless Runner. They are:

Pull and Push Private Image from AWS ECR

Amazon ECR Credential Helper: this utility lets us use private images stored on ECR. To pull images from a private ECR repository, we must authenticate with the registry through docker login, using a token that is valid for 12 hours. The helper handles that authentication for us whenever our .gitlab-ci.yml specifies an image from a private ECR repository for a stage.

To use the Amazon ECR Credential Helper, you must place the config.json file in the .docker directory of the user that needs to authenticate. In our example, the Dockerfile runs as root, so we store the file in /root/.docker/.

The content of the config.json:

{
  "credsStore": "ecr-login"
}
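For comparison, this is roughly what authenticating by hand looks like without the credential helper; the login would have to be repeated whenever the 12-hour token expires. It is a sketch that assumes the AWS CLI and Docker are installed. The function names are made up, and so is the account ID in the usage line.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Build the hostname of a private ECR registry.
# Usage: ecr_registry_host <aws-account-id> <region>
ecr_registry_host() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Log Docker in to ECR with a temporary password.
# Usage: ecr_login <aws-account-id> <region>
ecr_login() {
  local registry
  registry="$(ecr_registry_host "$1" "$2")"
  aws ecr get-login-password --region "$2" |
    docker login --username AWS --password-stdin "$registry"
}
```

A call such as `ecr_login 111111111111 us-east-1` would be needed before every pull from a private repository, which is exactly the step the helper takes care of.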

GitLab Runner

GitLab Runner: The application (the gitlab-runner binary) that configures the server as a Runner and registers it on GitLab. Its service runs on the server and communicates with the GitLab server over an API.

Docker Machine: A tool that installs Docker Engine on virtual hosts and manages those hosts with docker-machine commands. You can use it to create Docker hosts on a local computer, on your company network, or on cloud providers like Azure, AWS, or DigitalOcean. With docker-machine commands you can start, inspect, stop, and restart a managed host, upgrade the Docker client and daemon, and configure a Docker client to talk to your host.

The most crucial part of this Docker image is the entrypoint, where we define how the Runner registers on GitLab using the parameters we have chosen.

gitlab-runner register --executor docker+machine \
    --docker-tlsverify \
    --docker-volumes '/var/run/docker.sock:/var/run/docker.sock' \
    --docker-pull-policy="if-not-present" \
    --run-untagged="true" \
    --machine-machine-driver "amazonec2" \
    --machine-machine-name "gitlab-%s" \
    --request-concurrency "$RUNNER_REQUEST_CONCURRENCY" \
    --machine-machine-options amazonec2-use-private-address \
    --machine-machine-options amazonec2-security-group="$AWS_SECURITY_GROUP" \
    --machine-machine-options amazonec2-subnet-id="$AWS_SUBNET_ID" \
    --machine-machine-options amazonec2-zone="$AWS_SUBNET_ZONE" \
    --machine-machine-options amazonec2-vpc-id="$AWS_VPC_ID" \
    --machine-machine-options amazonec2-iam-instance-profile="$RUNNER_IAM_PROFILE" \
    "${RUNNER_TAG_LIST_OPT[@]}" \
    "${ADDITIONAL_REGISTER_PARAMS_OPT[@]}"

Below are some important comments and details about the registration process:

How the Runner works inside the AWS Fargate Container

To be clear, the Task that runs on Fargate is responsible for registering the Runner. After that, this serverless container waits for jobs from GitLab.

The script that performs the registration is the “entrypoint.sh” of the container running on Fargate.

All variables are defined and provided by the CloudFormation template, and their values are stored in the Fargate Task Definition once it is created.

The registration is slightly different when using “--executor docker+machine”, because we must specify the machine options, which belong to the AWS driver, separately from the Docker parameters.

All the “machine-machine-options” parameters are for the Executor server. The Runner uses them to create the EC2 instances (the Executors) and then run the pipeline inside them. The other parameters are for registering the Runner on GitLab.

If you need more parameters to register your Runner on GitLab, you can pass them through the ADDITIONAL_REGISTER_PARAMS_OPT variable when you deploy the CloudFormation template.

Important Notes about the Runner registration

During registration, we can also specify tags for the Runner. With a tag, the Runner only picks up jobs from projects that have defined that specific tag. In the CloudFormation template, you can specify the tag or leave it blank.

If you don’t specify a tag, the script automatically uses “--run-untagged=true”, which means GitLab can use your Runner for any project (what GitLab calls a Shared Runner).
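One way an entrypoint script could build the tag options is sketched below. This is not the code from the repository: the function name and the RUNNER_TAG_LIST variable are assumptions, and only the RUNNER_TAG_LIST_OPT array name is taken from the register command shown earlier.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Fill RUNNER_TAG_LIST_OPT from a comma-separated tag list.
# Usage: build_tag_options "<tag1,tag2>"   (an empty string means no tags)
build_tag_options() {
  local tags="$1"
  if [ -n "$tags" ]; then
    # Tags were given: pass them to "gitlab-runner register".
    RUNNER_TAG_LIST_OPT=(--tag-list "$tags")
  else
    # No tags were given: let the Runner accept untagged jobs.
    RUNNER_TAG_LIST_OPT=(--run-untagged=true)
  fi
}

build_tag_options "${RUNNER_TAG_LIST:-}"
printf '%s\n' "${RUNNER_TAG_LIST_OPT[*]}"
```

Keeping the options in an array means the register command can expand them with `"${RUNNER_TAG_LIST_OPT[@]}"` without any quoting problems.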

The AWS_SUBNET_ZONE variable is just the letter of your availability zone. For example, if you plan to deploy the Runner in us-east-1a, use the letter “a.”
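If you only know the full availability zone name, the letter can be derived from it. A tiny sketch; the function name is made up for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Return the trailing letter of an availability zone name.
# Usage: zone_letter us-east-1a
zone_letter() {
  local az="$1"
  # ${az: -1} expands to the last character (the space before -1 is required).
  printf '%s\n' "${az: -1}"
}

AWS_SUBNET_ZONE="$(zone_letter "us-east-1a")"
echo "AWS_SUBNET_ZONE=${AWS_SUBNET_ZONE}"   # prints AWS_SUBNET_ZONE=a
```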

What is the network configuration of your GitLab?

If your GitLab server is not wide open to the internet, I recommend deploying the Runner in a private subnet and using a NAT IP to communicate with GitLab, so the traffic always comes from the same address. A public subnet for the Runner and Executor could be a problem: each time the Runner creates an EC2 instance (Executor), the instance gets a new public IP address, so the Security Group of your GitLab server will not allow the Executor to reach GitLab.

If possible, you can also point your Runner and Executors at the private IP of your GitLab server to avoid these issues.

How to configure the concurrent limit for the Runner?

Note that “entrypoint.sh” uses a “sed” command to replace the “concurrent” value in the Runner configuration. This parameter is not available in the registration command, so we need to set it manually.
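A minimal sketch of such a replacement follows; it is not the exact line from the repository. It assumes the configuration file is the default /etc/gitlab-runner/config.toml and that the limit arrives in a variable named RUNNER_CONCURRENT_LIMIT, which is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Set the global "concurrent" value in a GitLab Runner config file.
# Usage: set_concurrent <config-file> <limit>
set_concurrent() {
  local config_file="$1"
  local limit="$2"
  local tmp_file
  tmp_file="$(mktemp)"

  # Rewrite only the line that starts with "concurrent =".
  sed "s/^concurrent = .*/concurrent = ${limit}/" "$config_file" > "$tmp_file"
  # Copy the result back so the original file keeps its permissions.
  cat "$tmp_file" > "$config_file"
  rm -f "$tmp_file"
}

# Example call inside an entrypoint (commented out on purpose):
# set_concurrent /etc/gitlab-runner/config.toml "${RUNNER_CONCURRENT_LIMIT:-10}"
```

Writing to a temporary file instead of using `sed -i` keeps the function portable between GNU and BSD versions of sed.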

The notes below come from my own experience with this solution. I will try to give you enough information to analyze it and make your own decision, saving you time and helping you avoid some mistakes.

What I learned about: Autoscaling GitLab CI on AWS Fargate

Advantages

This solution is the best for building heavy applications that require a big server. The Runner creates an “Executor” server on demand, only to build an application or run whatever demanding task we defined. We don’t have to leave that server running when we don’t need it, which can save a lot of money.

Also, we don’t need to worry about maintaining and patching the Runner server.

And because Executors are created on demand, we can have several pipelines running simultaneously: each pipeline runs on a different Executor without impacting other projects.

You can also define a limit on how many Executors (parallel builds) the Runner creates to meet high demand.

Disadvantages

Timing

For small projects, where the pipeline takes less than 3 minutes, this approach is not worth it if you care about build time.

I noticed that the Executor (EC2) takes 2.5 minutes to get ready and start executing the pipeline. For small projects, it was a little annoying to wait for that every day.

Possible Solution

There is a workaround for this scenario: you can define an idle time, during which the Executor server stays up waiting for more jobs from GitLab. It’s up to you to decide how to handle it.
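With the docker+machine executor, the idle behaviour is controlled by the IdleCount and IdleTime settings of the [runners.machine] section in the Runner configuration. The sketch below passes them as extra register flags through the ADDITIONAL_REGISTER_PARAMS_OPT array used by the register command. The IDLE_NODES and IDLE_TIME_SECONDS variables and their values are only examples, and it is worth confirming the flag names with `gitlab-runner register --help` for your Runner version.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Example values (assumptions): keep no spare machine, but let a finished
# Executor wait up to 30 minutes for another job before it is removed.
IDLE_NODES="${IDLE_NODES:-0}"
IDLE_TIME_SECONDS="${IDLE_TIME_SECONDS:-1800}"

# Extra flags appended to "gitlab-runner register".
ADDITIONAL_REGISTER_PARAMS_OPT=(
  --machine-idle-nodes "$IDLE_NODES"        # IdleCount in config.toml
  --machine-idle-time "$IDLE_TIME_SECONDS"  # IdleTime in config.toml (seconds)
)

printf '%s\n' "${ADDITIONAL_REGISTER_PARAMS_OPT[*]}"
```

A longer idle time shortens the wait for the next pipeline but keeps the EC2 instance billed for longer, so the right value depends on how often your team pushes.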

Take advantage of the Runner Tags

For my projects, I decided to leave one Runner (a regular, small EC2 instance) running full-time to build projects that take less than 3 minutes. I configured tags on my projects and Runners so that each project selects the proper Runner.

In the same way, you can set a specific tag on a project that needs a big server, so that it automatically picks up the Runner on Fargate. See our post on how to use GitLab Tags for your Runner.

Cache Consideration on Runner in AWS Fargate

Another limitation of this Runner on Fargate is that we lose control over the cache on the Runner (in this case, the Executor). Because we always create a new Executor server, we lose all files from the previous pipeline. So if your project depends on a good caching approach, this will be a little tricky.

Example from a situation with Java Projects

For instance, a Java project needs to pull a lot of dependencies from Nexus. In this setup, that takes more time than usual because we destroy the Executor after each build. When we run the build a second time, the Executor is a new server, so we must pull the dependencies from Nexus again.

I want to mention a feature that lets the Runner store its cache in an S3 bucket. I didn’t use it, because how it works looked confusing to me, and I couldn’t find precise information on whether the Runner version I was using supported it. It seemed to me that the feature was deprecated, so I will not cover it here. If you know something about it, let me know.

Docker Volumes on Runner with AWS Fargate

Also, because the Runner and the Executor live on different servers, it’s impossible to use Docker Volumes to share files between them, as we could with a regular Runner that also executes the pipeline itself. With this solution, we need an S3 bucket or some other approach.

Conclusion

Using AWS Fargate to run the GitLab Runner is a compelling approach to support heavy builds and high user demand. Even with some negative points, it’s worth deploying in your environment, and in specific scenarios it costs less to keep running.

Check also the articles related to Gitlab Runner:

Effective Cache Management with Maven Projects on Gitlab.

Pipeline to build Docker in Docker on Gitlab.

How to use the Runner Tags the right way.
