Protect Passwords in the Cloud

Security will always be a concern for any company and for any development or DevOps team that creates and deploys applications. Here I describe a complete project I was involved in to improve the security of our applications and platform. To make it easier to follow, I will start with an overview of the project, our goal, and the problems and requirements we established.

Protect Passwords

Our first and main goal was to review all the applications we have been maintaining for years and improve the security of their authentication. That meant removing every password stored unprotected or hardcoded in files, such as shell scripts, Java code, or anywhere else.
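As a minimal sketch of what removing a hardcoded password can look like in a shell script, the function below reads a credential from Secrets Manager at runtime. It assumes the AWS CLI and jq are installed, that the secret stores a JSON object with keys such as username and password, and that the function name get_secret_field and the secret name SM-db-blog are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one field of a JSON secret from AWS Secrets Manager at
# runtime instead of hardcoding it in the script.
# Assumes the AWS CLI and jq are installed and the caller may read the secret.
get_secret_field() {
  local secret_id="$1" field="$2" secret_json
  # --query/--output text prints only the SecretString, not the whole API response.
  secret_json="$(aws secretsmanager get-secret-value \
    --secret-id "$secret_id" --query SecretString --output text)" || return 1
  # -e: exit non-zero if the field is missing (null); -r: print the raw string.
  printf '%s' "$secret_json" | jq -e -r --arg f "$field" '.[$f]'
}

# Before: DB_PASS="blog"   (hardcoded)
# After (hypothetical secret name):
# DB_PASS="$(get_secret_field SM-db-blog password)"
```

The same idea applies to Java or any other language: the application asks Secrets Manager for the value when it runs, so the password never lives in the source code.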

It sounds easy if we think about one or two applications. But in our case, we are talking about more than 50 applications, all of which require high availability and reliability.

Besides removing and protecting all passwords stored in the wrong places, we needed to automate the process and define a standard for all applications, because we have many environments. We need to create new environments quickly and always follow the same standard. A well-defined process for creating credentials also makes managing many of them much easier.

With the goal and requirements in mind, here are the tools, services, and technologies we use to reach it: AWS Secrets Manager, Terraform, GitLab CI/CD, and OpenSSL.

How to secure your application's passwords

All our environments run on AWS, so it made a lot of sense to adopt AWS Secrets Manager as the service for storing our usernames and passwords.

Why Secrets Manager?

AWS Secrets Manager is a managed service that lets us easily store any kind of sensitive data, such as passwords, SSH keys, API credentials, or SSL certificates. The service is also deployed across multiple Availability Zones, so it delivers the high availability we must guarantee for our applications.

How to automate and deploy our Secrets

The DevOps world offers several tools that help us automate almost any process in the cloud. On AWS, for example, you can use CloudFormation or Terraform.

The solution in this article uses Terraform for Infrastructure as Code. Many companies have already adopted Terraform, and its community is strong. You can learn more about Terraform here on Bits Lovers.

CI/CD for Deployment

After writing the Terraform code, we needed to automate its deployment so we could ship the solution quickly. We use GitLab CI/CD for most of our projects.

If you work for a big company or on a large DevOps team, it makes a lot of sense to automate deployments with GitLab. Once the workflow is defined, the pipeline always produces the same result no matter who triggers it. It also gives us one standard for all new environments, which makes them easier to maintain and faster to deploy.

A Chicken and Egg Situation – Password or Secret?

Now let's think. We need to protect our passwords, but at some point we have to define them somewhere. That brings back the same problem we had before. The difference is that now a pipeline deploys them for us, and all passwords are centralized in a single place.

To protect our passwords, we need to create and deploy them in Secrets Manager. At the same time, we need a script that automates this process and keeps the passwords protected along the way.

Access to the scripts that deploy our secrets is controlled by our roles in GitLab, and Git versioning lets us track every change. However, anyone with access to the repository can read the files that contain the passwords. So, how can we protect our passwords inside a Git repository?

Encryption

We carefully designed a solution that protects our passwords before they are deployed to Secrets Manager. First, we configure the repository and the pipeline and create a separate file that stores all passwords. Then we encrypt that file with OpenSSL.

We never commit the decrypted file. Whenever we need to change or add credentials, we decrypt the file, make the changes, encrypt it again, and commit only the encrypted file.

OK, you may be thinking: hold on! To decrypt a file encrypted with OpenSSL, we need a private key. Where will we store it?
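To make "never commit the decrypted file" harder to forget, a Git pre-commit hook can reject it. The sketch below is hypothetical (the function name and file list are assumptions), and it checks staged file names only, not their contents.

```shell
#!/usr/bin/env bash
# Hypothetical pre-commit guard: refuse to commit the decrypted secrets file
# (or a private key) so only terraform.tfvars.enc reaches the repository.

# Plaintext files that must never be committed (adjust to your project).
FORBIDDEN_FILES=("terraform.tfvars" "private-key.pem")

# Reads staged paths (one per line) on stdin, reports offending paths on stderr,
# and returns 1 if any basename matches FORBIDDEN_FILES.
find_forbidden_files() {
  local path name forbidden found=0
  while IFS= read -r path || [ -n "$path" ]; do
    name="${path##*/}"   # compare basenames, so sub/dir/terraform.tfvars is caught too
    for forbidden in "${FORBIDDEN_FILES[@]}"; do
      if [ "$name" = "$forbidden" ]; then
        printf 'refusing to commit plaintext secret file: %s\n' "$path" >&2
        found=1
      fi
    done
  done
  return "$found"
}

# After these definitions, an executable .git/hooks/pre-commit would end with:
#   git diff --cached --name-only --diff-filter=ACMR | find_forbidden_files || exit 1
```

The --diff-filter=ACMR option only looks at added, copied, modified, or renamed files, so deleting an old plaintext file from the repository is still allowed.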

The aha moments

It looks like there is no way around it. Do we always hit a wall? We realized that there will always be something we must store and protect. If we use OpenSSL, how will our pipeline get the private key to decrypt the file? If we store the private key in Git, we lose all the security we gained.

Ok, relax. Here is the trick.

What if we also use Secrets Manager to store the private key, and restrict access to that secret with IAM so that only our GitLab Runner can retrieve it? The pipeline script then downloads the private key from Secrets Manager, decrypts the file, and deploys the other secrets using the information in the decrypted file.

Are you listening to rock and roll? It sounds like music.

Alright, let’s stop talking. Let’s start doing something. Stretch your fingers, and here we go!

To make this process easier for everybody, we have uploaded our scripts to our official repository on GitHub!

Terraform for AWS Secrets Manager

The Terraform code that deploys the secrets to AWS Secrets Manager is straightforward, but let's walk through it.

After you check out the code from GitHub, let's analyze the solution.

Inside the project, we created a Terraform module with the code that describes the secrets:

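```hcl
variable "SECRETS_LIST" {} # map of secrets, see terraform.tfvars below
variable "policy-for-sm" { # optional JSON resource policy for every secret
  default = null
}

resource "aws_secretsmanager_secret" "secrets" {
  for_each = var.SECRETS_LIST

  name                    = "SM-${each.key}"
  description             = lookup(each.value, "description", null)
  policy                  = var.policy-for-sm
  recovery_window_in_days = 0 # delete immediately on destroy, no recovery window

  tags = {
    Name               = "SM-${each.key}"
    Env                = "Prod"
    CreatedByTerraform = true
  }
}

resource "aws_secretsmanager_secret_version" "sm-version" {
  for_each = var.SECRETS_LIST

  # Referencing the secret's id creates the dependency implicitly,
  # so an explicit depends_on is not needed.
  secret_id     = aws_secretsmanager_secret.secrets[each.key].id
  secret_string = jsonencode(lookup(each.value, "secret_key_value", {}))
}
```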

The code above expects a map of secrets (var.SECRETS_LIST), so we can add a new secret simply by appending another entry (an object) to the map.

Our terraform.tfvars could then look like this:

```hcl
SECRETS_LIST = {
  db-blog = {
    description = "Blog Credentials"
    secret_key_value = {
      username = "blog"
      password = "blog"
    }
  },
  db-app = {
    description = "App Credentials"
    secret_key_value = {
      username = "app"
      password = "app"
    }
  }
}
```

Remember that we must never commit terraform.tfvars, because it contains all the passwords. Our sample project includes that file only to make it easier for you to read.

If you would like to generate random passwords instead, check our article on how to do it with Terraform.
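A .gitignore entry adds another safety net: Git then refuses to add terraform.tfvars unless forced with -f. The helper below is a hypothetical sketch that appends each entry only once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append entries to a .gitignore file, each only once.
ensure_gitignored() {
  local gitignore="$1" entry
  shift
  touch "$gitignore" || return 1
  # If the file does not end with a newline, add one so entries don't merge.
  if [ -s "$gitignore" ] && [ -n "$(tail -c 1 "$gitignore")" ]; then
    printf '\n' >> "$gitignore"
  fi
  for entry in "$@"; do
    # -q: quiet, -x: match the whole line, -F: literal string, not a regex
    grep -qxF -- "$entry" "$gitignore" || printf '%s\n' "$entry" >> "$gitignore"
  done
}

# Example, from the repository root:
# ensure_gitignored .gitignore terraform.tfvars private-key.pem
```

Note that .gitignore only affects untracked files. If terraform.tfvars is already tracked, as in our sample project, you would also run git rm --cached terraform.tfvars once.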

Create Private Key for Encryption

With the Terraform code and the secret definitions in terraform.tfvars in place, it's time to encrypt that file.

Create a Private Key using OpenSSL

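To create private-key.pem with OpenSSL, run the following command:

```shell
# -days overrides the 30-day default validity; -subj skips the interactive prompts
openssl req -x509 -nodes -newkey rsa:2048 -days 3650 -subj "/CN=terraform-tfvars" -keyout private-key.pem -out public-key.pem
```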

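The command above generates the private key (private-key.pem) and a self-signed X.509 certificate (public-key.pem) that contains the matching public key. openssl smime encrypts for a recipient certificate, which is why we create a certificate rather than a bare public key.

We use the private key only to decrypt terraform.tfvars.enc, and the public key (the certificate) only to encrypt terraform.tfvars again.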
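Because OpenSSL encrypts for the certificate and decrypts with the key, it can be useful to confirm that the two files really belong together, for example after retrieving the key from Secrets Manager. A minimal sketch, assuming OpenSSL 1.0 or later; keypair_matches is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the certificate (public-key.pem) contains the
# public half of the private key (private-key.pem), i.e. files encrypted for the
# certificate can be decrypted with that key.
keypair_matches() {
  local cert="${1:-public-key.pem}" key="${2:-private-key.pem}" cert_pub key_pub
  # Both commands print the public key as a PEM "PUBLIC KEY" block.
  cert_pub="$(openssl x509 -in "$cert" -noout -pubkey)" || return 1
  key_pub="$(openssl pkey -in "$key" -pubout)" || return 1
  [ "$cert_pub" = "$key_pub" ]
}

# Example: keypair_matches public-key.pem private-key.pem && echo "key pair OK"
```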

Create the Secret Using the Command Line

The private key must be protected too, and should not be shared with anybody. In our solution, we store it as a secret in AWS Secrets Manager, which you can create either in the AWS Console or with the AWS CLI.

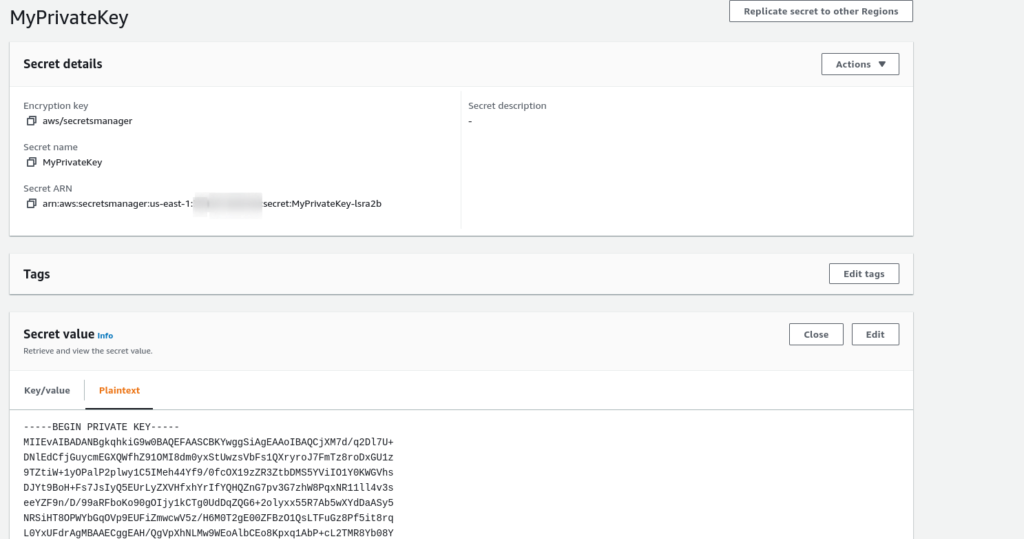

aws secretsmanager create-secret --name MyPrivateKey --secret-string file:///path/to/private-key.pem

After executing the command above, you will be able to see the Secret on AWS Console:

Now private-key.pem is safely stored in Secrets Manager, so we don't need to keep it locally or save it anywhere else.

Next, we can update or add secret definitions in terraform.tfvars and then encrypt the file before committing it.
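Running create-secret a second time fails because a secret named MyPrivateKey already exists. If you ever replace the key pair, a hypothetical helper like the one below adds a new version with put-secret-value instead; it assumes an AWS CLI configured with credentials and a region.

```shell
#!/usr/bin/env bash
# Hypothetical helper: store a private key in Secrets Manager, creating the secret
# on the first run and adding a new version on later runs.
store_private_key() {
  local secret_id="$1" key_file="$2"
  # Any describe-secret failure (including missing permissions) falls through to
  # create-secret, which then reports its own error.
  if aws secretsmanager describe-secret --secret-id "$secret_id" >/dev/null 2>&1; then
    aws secretsmanager put-secret-value --secret-id "$secret_id" \
      --secret-string "file://$key_file" >/dev/null
  else
    aws secretsmanager create-secret --name "$secret_id" \
      --secret-string "file://$key_file" >/dev/null
  fi
}

# Example: store_private_key MyPrivateKey ./private-key.pem
```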

How to encrypt a file with our public key

```shell
openssl smime -encrypt -binary -aes-256-cbc -in terraform.tfvars -out terraform.tfvars.enc -outform DER public-key.pem
```

After encrypting, it is safe to delete terraform.tfvars and commit terraform.tfvars.enc. Our passwords stay protected, even inside the Git repository.

We can also commit the public key (never the private key), so the file can be encrypted again quickly later.
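The encrypt-then-delete step can be wrapped so that the plaintext is removed only when OpenSSL succeeds and produces a non-empty file. A sketch, with encrypt_tfvars as a hypothetical name and this article's file names as defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: encrypt terraform.tfvars for the certificate in
# public-key.pem and delete the plaintext only if encryption succeeded.
encrypt_tfvars() {
  local plain="${1:-terraform.tfvars}" cert="${2:-public-key.pem}"
  local enc="$plain.enc"
  openssl smime -encrypt -binary -aes-256-cbc \
    -in "$plain" -out "$enc" -outform DER "$cert" || return 1
  # Never delete the plaintext if OpenSSL produced an empty file.
  [ -s "$enc" ] || return 1
  rm -f -- "$plain"
}

# Example: encrypt_tfvars && git add terraform.tfvars.enc
```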

How to decrypt using the Private Key

Suppose we need to decrypt the file later to make new changes. We check out the project from Git, retrieve the private key from Secrets Manager, and use it to decrypt terraform.tfvars.enc.

Retrieve the private key programmatically with the AWS CLI, which calls the Secrets Manager API:

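```shell
aws secretsmanager get-secret-value --secret-id MyPrivateKey | jq -r .SecretString > private-key.pem
```

The following command decrypts terraform.tfvars.enc back to its original name, terraform.tfvars. Note that the input format is DER, matching the -outform DER used when encrypting:

```shell
openssl smime -decrypt -in terraform.tfvars.enc -binary -inform DER -inkey private-key.pem -out terraform.tfvars
rm -f private-key.pem  # don't leave the private key on disk
```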
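To avoid leaving private-key.pem behind, the retrieval and decryption can run in one function that writes the key to a temporary file and always deletes it. A minimal sketch assuming a configured AWS CLI and OpenSSL; decrypt_tfvars is hypothetical, and it uses --query SecretString --output text in place of jq to extract the SecretString.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch the private key from Secrets Manager into a temporary
# file, decrypt terraform.tfvars.enc, and always delete the key afterwards.
decrypt_tfvars() (
  # The body runs in a subshell, so umask and the EXIT trap stay local to it.
  secret_id="${1:-MyPrivateKey}"
  enc="${2:-terraform.tfvars.enc}"
  umask 077                          # key and output readable only by the current user
  key_file="$(mktemp)" || exit 1
  trap 'rm -f "$key_file"' EXIT      # runs on success and on failure
  # --query/--output text extracts SecretString, like "| jq -r .SecretString".
  aws secretsmanager get-secret-value --secret-id "$secret_id" \
    --query SecretString --output text > "$key_file" || exit 1
  openssl smime -decrypt -binary -inform DER \
    -in "$enc" -inkey "$key_file" -out "${enc%.enc}"
)

# Example: decrypt_tfvars MyPrivateKey terraform.tfvars.enc
```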

Automation Using a GitLab Pipeline for Secrets Manager

Now we need a pipeline that runs the following actions automatically:

1 – Build a container that holds all the dependencies needed to run the pipeline.

2 – Retrieve the private key from AWS Secrets Manager.

3 – Decrypt the file terraform.tfvars.enc using the private key.

4 – Run the Terraform commands: init, plan, and apply.

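Remember that every GitLab job runs on a Runner, so the Runner server must be allowed to reach Secrets Manager. For security reasons, it's also a good idea to lock down access to the secret MyPrivateKey, which holds our private key, so that only the GitLab Runner can read it. Keep in mind that an Allow statement in the secret's resource policy grants access to the Runner's role; it does not by itself remove access from other IAM users or roles in the same account whose own IAM policies already allow secretsmanager:GetSecretValue on all secrets.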
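As an optional fail-fast step, a pipeline job can confirm that it is running under the Runner's role before reading MyPrivateKey. This is a hypothetical sketch: it assumes the Runner gets its credentials from an instance profile, so the caller ARN has the assumed-role form, and it reuses the role name runner-iam_profile from the policy example. It is only a diagnostic; the policies still do the actual access control.

```shell
#!/usr/bin/env bash
# Hypothetical fail-fast check for a pipeline job: confirm that the AWS identity
# is the Runner's role before reading MyPrivateKey. Diagnostic only; access
# control itself is done by the IAM and resource policies.
EXPECTED_ROLE="${EXPECTED_ROLE:-runner-iam_profile}"   # role name from the policy example

check_runner_identity() {
  local arn
  arn="$(aws sts get-caller-identity --query Arn --output text)" || return 1
  # With an instance profile, the caller ARN has the form
  # arn:aws:sts::ACCOUNT_ID:assumed-role/ROLE_NAME/SESSION_NAME
  case "$arn" in
    arn:aws:sts::*:assumed-role/"$EXPECTED_ROLE"/*) return 0 ;;
    *) printf 'unexpected AWS identity: %s\n' "$arn" >&2; return 1 ;;
  esac
}

# Example, as the first script line of the Plan job: check_runner_identity || exit 1
```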

Example of a resource policy

This resource-based policy is attached to the MyPrivateKey secret, so "Resource": "*" refers to that secret itself:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowRunnerToReadPrivateKey",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::AWS_ACCOUNT_ID:role/runner-iam_profile"
      },
      "Action": "secretsmanager:GetSecretValue",
      "Resource": "*"
    }
  ]
}
```
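After saving the policy above to a file, it can be attached with the AWS CLI. The sketch below (attach_secret_policy and the file name are hypothetical, and it assumes an AWS CLI allowed to manage the secret) first runs validate-resource-policy, which reports the result in its PolicyValidationPassed field, and then calls put-resource-policy with --block-public-policy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a resource policy stored in a JSON file and attach
# it to a secret.
attach_secret_policy() {
  local secret_id="$1" policy_file="$2" passed
  passed="$(aws secretsmanager validate-resource-policy --secret-id "$secret_id" \
    --resource-policy "file://$policy_file" \
    --query PolicyValidationPassed --output text)" || return 1
  # Text output renders the boolean as True or False.
  if [ "$passed" != "True" ]; then
    printf 'policy validation failed for %s\n' "$policy_file" >&2
    return 1
  fi
  # --block-public-policy makes the call fail if the policy would allow broad access.
  aws secretsmanager put-resource-policy --secret-id "$secret_id" \
    --resource-policy "file://$policy_file" --block-public-policy >/dev/null
}

# Example: attach_secret_policy MyPrivateKey runner-policy.json
```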

Example of .gitlab-ci.yml

Let's see how the complete solution looks in our pipeline definition.

To learn more, see our article about deploying Terraform code using GitLab.

The .gitlab-ci.yml file:

```yaml
variables:
  AWS_REGION: us-east-1
  AWS_DEFAULT_REGION: us-east-1
  # Disables TLS verification for Git; keep it only if your GitLab uses a self-signed certificate.
  GIT_SSL_NO_VERIFY: "true"
  PHASE: BUILD

stages:
  - build_container
  - plan
  - deploy

build_container:
  image: docker:latest
  stage: build_container
  script:
    # Later jobs use this image by name. This assumes they run on the same Docker
    # host and that the runner uses the local image instead of pulling from a registry.
    - docker build -t build-container .

Plan:
  image:
    name: build-container
    entrypoint: [""]
  stage: plan
  artifacts:
    paths:
      - plan.data
    expire_in: 1 week
  script:
    - aws secretsmanager get-secret-value --secret-id MyPrivateKey | jq -r .SecretString > private-key.pem
    - openssl smime -decrypt -in terraform.tfvars.enc -binary -inform DER -inkey private-key.pem -out terraform.tfvars
    - rm -f private-key.pem
    - terraform init
    - terraform plan -input=false -out=plan.data
  only:
    variables:
      - $PHASE == "BUILD"

Apply:
  image:
    name: build-container
    entrypoint: [""]
  when: manual
  stage: deploy
  script:
    - aws secretsmanager get-secret-value --secret-id MyPrivateKey | jq -r .SecretString > private-key.pem
    - openssl smime -decrypt -in terraform.tfvars.enc -binary -inform DER -inkey private-key.pem -out terraform.tfvars
    - rm -f private-key.pem
    - terraform init
    - terraform apply -auto-approve -input=false plan.data
  only:
    variables:
      - $PHASE == "BUILD"
  environment:
    name: bitsprod

Destroy:
  image:
    name: build-container
    entrypoint: [""]
  stage: deploy
  script:
    - aws secretsmanager get-secret-value --secret-id MyPrivateKey | jq -r .SecretString > private-key.pem
    - openssl smime -decrypt -in terraform.tfvars.enc -binary -inform DER -inkey private-key.pem -out terraform.tfvars
    - rm -f private-key.pem
    - terraform init
    - terraform destroy -auto-approve
  only:
    variables:
      - $PHASE == "DESTROY"
  environment:
    name: bitsprod
    action: stop
```

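That file defines the whole workflow needed to deploy our secrets to Secrets Manager.

Note that the first stage of the pipeline builds a container with all the dependencies the other stages need. To learn more, see our article about creating Docker containers with GitLab.

Also keep in mind that the saved plan (plan.data) contains the secret values in plain text, so limit who can download the job artifacts and keep expire_in short.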

Build Docker Container

Example of the Dockerfile for our pipeline image:

```dockerfile
FROM amazonlinux:2.0.20200722.0

LABEL maintainer="Bits Lovers"

ENV TERRAFORM_VERSION=0.14.11
ENV AWSCLI_VERSION=1.19.52
ENV AWS_REGION=us-east-1

# Run update and install dependencies (python3-pip is the Amazon Linux 2 package name)
RUN yum update -y && \
    yum install -y sudo jq python3 python3-pip unzip git openssl && \
    yum clean all

RUN pip3 install --upgrade pip setuptools awscli==${AWSCLI_VERSION}

ADD https://releases.hashicorp.com/terraform/${TERRAFORM_VERSION}/terraform_${TERRAFORM_VERSION}_linux_amd64.zip /usr/bin/

RUN mv /usr/local/bin/aws /usr/bin/ && \
    chmod +x /usr/bin/aws

RUN unzip /usr/bin/terraform_${TERRAFORM_VERSION}_linux_amd64.zip -d /usr/bin && \
    rm -f /usr/bin/terraform_${TERRAFORM_VERSION}_linux_amd64.zip

RUN aws configure set region ${AWS_REGION}

RUN terraform --version && \
    aws --version
```

The Dockerfile contains the OpenSSL, Terraform, and other dependencies needed for our project.
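A quick way to confirm that the image really contains these tools is a check like the hypothetical check_tools below, run as a RUN step or as the first line of a job; the tool list in the example is an assumption based on the commands used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test for the pipeline image: fail if any required tool is
# missing from PATH.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf 'missing required tool: %s\n' "$tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_tools terraform aws openssl jq git
```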

Final Considerations

A critical note about security: if you have used Terraform for a while, you know that Terraform records everything it manages in its state file, usually kept in a remote backend. That includes the secret values themselves. Whether you use an S3 bucket or another remote backend, you must make sure that nobody else can read the state file, because, by default, the secrets in it are stored in plain text. Server-side encryption of the bucket protects the data at rest, but anyone allowed to read the object still sees the secrets. For example, if many people have full S3 access in your AWS account, you need to rethink how to limit access to this file.

The best approach is to keep the state in a dedicated AWS account that only a few people can access. If that is not your case and you already restrict S3 access with carefully scoped IAM roles, congratulations: you are doing a great job of segregating permissions.

I have seen people encrypt the remote state themselves. In my opinion, that is too risky: if, for some reason, we lose the ability to read the remote state, we will be in big trouble. But it's up to you.

Protecting passwords in the cloud always deserves a pause and careful design. I hope you enjoyed this article and learned something new, or that it makes your life easier; then I will know I reached my goal. Thanks for reading, and please leave a comment or share it on social media. It helps me a lot until the next article.