Hello, Bits Lovers. I am happy to see you again. Let’s continue learning how to decouple our applications. This article explains what Simple Queue Service (SQS) is. Before we look at the service itself, we need to understand an important idea: what is poll-based messaging? Then we’ll look at SQS and how it serves our architecture. After that, we’ll go through the configurations we need to understand in depth to set up a queue correctly. We’ll take a deeper dive into one of them, the visibility timeout, and how it controls the communication between our architecture and the queue. And finally, we will give you some exam tips.

If you want to learn the differences between AWS SNS and SQS, you can take advantage of this post.

We explain FIFO and Dead-Letter Queues in separate articles to give you a clear understanding.

What is poll-based messaging?

Poll-based messaging is a simple idea, and one we already know from everyday life. For example, you would like to mail a letter to a family member, so after writing the message, you put a stamp on it and hand it off to the post office.

Later, the post office takes the letter and delivers it to the destination mailbox. Then, whenever your family member feels prepared, they can go to the mailbox, pick up that letter, and read it. That is what poll-based messaging means.

In this process, a producer, for example a web page (frontend), takes a message in and writes it to an existing SQS queue. Later, the backend server (the consumer) retrieves that message from the queue whenever it’s ready. So, we can think of SQS as the postman putting a letter into the mailbox.
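The producer and consumer roles described above can be sketched in a few lines of Python. This is only an illustration: the functions take any object that offers the `send_message` and `receive_message` calls of the boto3 SQS client, and the function names and the queue URL in the comment are made up for this example.

```python
import json


def produce(sqs, queue_url, order):
    """Frontend side: drop one message into the queue and return its ID."""
    response = sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps(order))
    return response["MessageId"]


def consume_once(sqs, queue_url):
    """Backend side: poll when ready and return the decoded messages (may be empty)."""
    response = sqs.receive_message(QueueUrl=queue_url, MaxNumberOfMessages=10)
    # The "Messages" key is absent when the poll returned nothing.
    return [json.loads(message["Body"]) for message in response.get("Messages", [])]


# With real AWS credentials, the wiring would look roughly like this
# (hypothetical queue URL):
#   import boto3
#   sqs = boto3.client("sqs")
#   url = "https://sqs.us-east-1.amazonaws.com/123456789012/orders"
#   produce(sqs, url, {"order_id": 1})
#   print(consume_once(sqs, url))
```

Note that the producer never talks to the consumer directly; each side only knows the queue.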

What is AWS SQS?

It’s a message queue that allows a job to be processed asynchronously. First, what does the term asynchronous mean? It’s a critical idea to understand, and it works a little differently from how we usually communicate with our servers. In an earlier article, LINK, we learned the best practice of placing EC2 instances behind a load balancer, so that requests go directly from the ELB to the EC2 instance or another resource.

But what if we don’t need a direct connection? For example, the frontend composes a message and sends it to the SQS queue, and a backend server retrieves it whenever it’s ready. With this approach, the backend doesn’t have to react immediately to a request arriving from a load balancer when it’s not prepared to receive it. Instead, it connects to SQS and retrieves the message whenever it’s ready to process it. That’s the idea of asynchronous: the communication is not direct. The SQS queue acts as a buffer.

SQS Settings

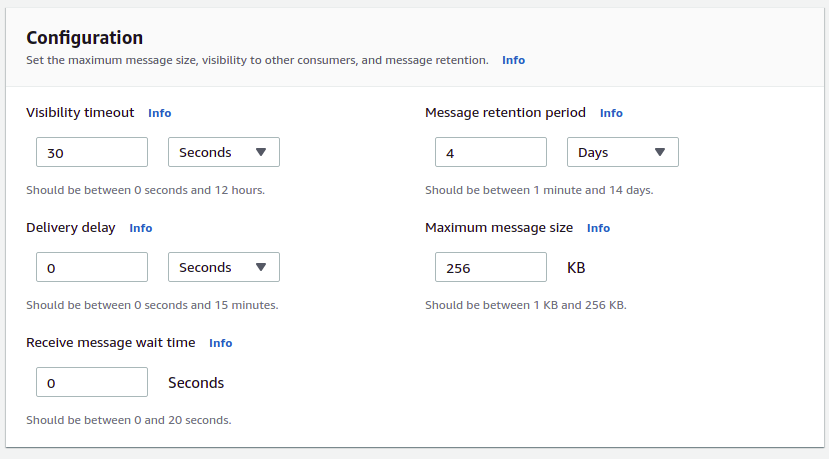

SQS is an uncomplicated service, as the name suggests. However, there are several configurations that we must be aware of to make sure the queue works correctly. Let’s go through them one by one. There are more settings than the ones listed here, but the ones below are enough if you are preparing for the AWS exam or starting to use SQS.

SQS Message size

There is a hard limit: by default, a message can be up to 256 kilobytes. The message can be text in any format, so we only need to pay attention to the 256-kilobyte limit for the whole message. It’s possible to configure that limit to a lower value than 256 kilobytes, but not a higher one.
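A quick way to respect that limit is to measure the encoded body before sending it. The sketch below assumes the 256-kilobyte figure from this article (262,144 bytes) and a UTF-8 encoded body; the helper name is invented for illustration. Keep in mind that message attributes, if you use them, count toward the same limit, which this check does not cover.

```python
MAX_SQS_MESSAGE_BYTES = 256 * 1024  # 262,144 bytes, the limit described in this article


def fits_in_sqs(body: str, limit: int = MAX_SQS_MESSAGE_BYTES) -> bool:
    """Return True if the UTF-8 encoded body is within the size limit."""
    # Count bytes, not characters: non-ASCII characters take more than one byte.
    return len(body.encode("utf-8")) <= limit
```

Counting bytes instead of characters matters for non-English text, where a single character can take two or more bytes.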

SQS Message Retention Period

The Message Retention Period is a vital configuration that we need to understand before designing our solution. Messages stay in an SQS queue for a limited time. The default is four days, but we can set it anywhere from 60 seconds up to 14 days. When a message reaches that age, SQS removes it from the queue. That means that if our consumers don’t retrieve and process a message before the retention period expires, the message is lost.
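As a small illustration, the retention period is set through the `MessageRetentionPeriod` queue attribute, expressed in seconds and passed as a string. The helper below is a hypothetical convenience function that converts days into that attribute and rejects values outside the 60-second to 14-day range mentioned above.

```python
MIN_RETENTION_SECONDS = 60
MAX_RETENTION_SECONDS = 14 * 24 * 60 * 60  # 14 days


def retention_attributes(days: float) -> dict:
    """Build the queue attribute for a retention period given in days."""
    seconds = int(days * 24 * 60 * 60)
    if not MIN_RETENTION_SECONDS <= seconds <= MAX_RETENTION_SECONDS:
        raise ValueError("retention must be between 60 seconds and 14 days")
    # SQS expects attribute values as strings.
    return {"MessageRetentionPeriod": str(seconds)}


# Applied with the boto3 SQS client, this would look roughly like:
#   sqs.set_queue_attributes(QueueUrl=queue_url, Attributes=retention_attributes(7))
```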

SQS Delay Message

The default delivery delay is zero seconds. However, we can raise it up to 15 minutes.

What does Delivery Delay do?

When we send a message to the queue and the delay is set to more than zero, the queue hides the message for that duration. Consumers that request new messages will only see it once the delay has elapsed.

But why would we want to delay messages consciously?

That is a good question. To answer it, suppose a web server hosts the frontend of our online store and puts customer orders on the queue. A customer enters their credit card details and address, and we pass that information to the backend server. In this scenario, it may be helpful to delay the message that tells the customer their order was completed successfully until the backend server has had time to confirm the credit card transaction. So, we can define a delay between the moment the message is sent to the SQS queue and the moment a backend server can retrieve it and act on the customer order.
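Besides the queue-wide setting, a delay can also be requested for a single message through the `DelaySeconds` parameter of `send_message`. The sketch below assumes a boto3-style SQS client is passed in and a standard queue; the function name is made up for this example.

```python
MAX_DELAY_SECONDS = 15 * 60  # 15 minutes, the maximum delivery delay


def send_with_delay(sqs, queue_url, body, delay_seconds=0):
    """Send a message that stays hidden from consumers for delay_seconds."""
    if not 0 <= delay_seconds <= MAX_DELAY_SECONDS:
        raise ValueError("delay must be between 0 and 900 seconds")
    return sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=body,
        DelaySeconds=delay_seconds,
    )


# Example (hypothetical): hold the "order completed" notice for two minutes
#   send_with_delay(sqs, queue_url, '{"order_id": 1, "status": "completed"}', 120)
```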

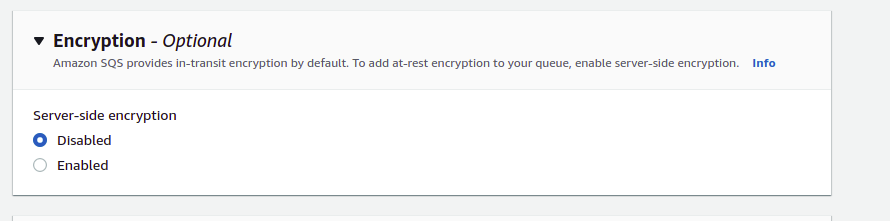

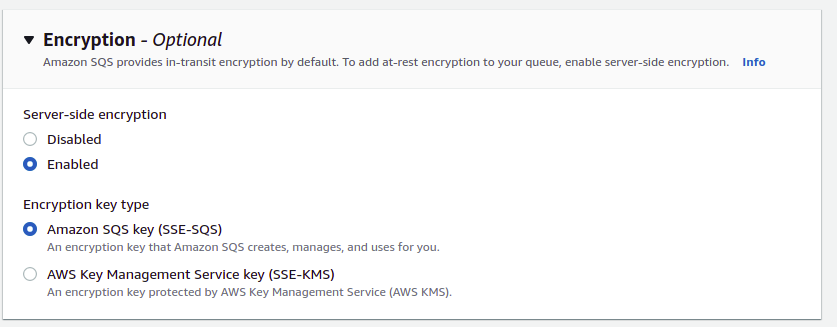

SQS Encryption

The default value for at-rest encryption is false. However, with SQS, the messages are encrypted in transit by default. To enable at-rest encryption for your queue, enable server-side encryption by clicking on the check box (see the screenshot below). Then, select the KMS key.
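The same setting can be applied outside the console through queue attributes. The following is a sketch under assumptions: it uses the `KmsMasterKeyId` and `KmsDataKeyReusePeriodSeconds` attributes with the AWS managed key alias `alias/aws/sqs`, and the helper name is hypothetical. Replace the key ID with your own KMS key if you manage one.

```python
def sse_kms_attributes(kms_key_id="alias/aws/sqs", reuse_seconds=300):
    """Build queue attributes that turn on server-side encryption with a KMS key."""
    # The data key reuse period must be between 1 minute and 24 hours.
    if not 60 <= reuse_seconds <= 86400:
        raise ValueError("reuse period must be between 60 and 86400 seconds")
    return {
        "KmsMasterKeyId": kms_key_id,
        "KmsDataKeyReusePeriodSeconds": str(reuse_seconds),
    }


# Applied with the boto3 SQS client, this would look roughly like:
#   sqs.set_queue_attributes(QueueUrl=queue_url, Attributes=sse_kms_attributes())
```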

The default option is disabled on server-side encryption:

If you select the server-side encryption:

SQS Visibility Timeout

First, let’s understand what that number denotes.

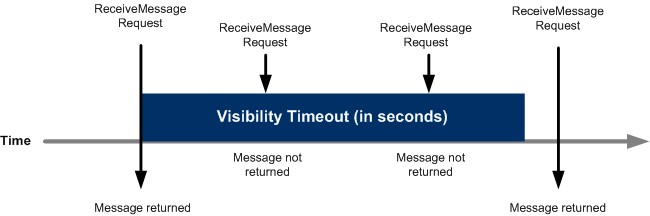

When a backend server retrieves a message from a queue, the message stays in the queue. SQS doesn’t automatically delete it. The reason is that SQS is a distributed system: there’s no guarantee that the backend server actually received and processed the message (for instance, it may hit connectivity issues or a bug in the backend application). Therefore, the backend server must delete the message from the queue after reading and processing it.

Because a retrieved message stays in the queue, SQS needs a way to keep another backend server from processing it again. For that, SQS applies a visibility timeout, a period during which it stops other consumers from receiving and processing the message. We can think of this mechanism as placing a lock on the message. The default visibility timeout is 30 seconds; the minimum is 0 seconds and the maximum is 12 hours.

Let’s imagine we configure the visibility timeout to 60 seconds, and the backend server receives a message.

The message stays in the queue, but no one else can see it. So if a different server polls the queue, the queue will say, “I don’t have new messages for you,” even though the locked message is there. However, if the backend server fails to process and delete the message within 60 seconds, the message will show up again in the queue.

So, if our backend goes offline, we don’t lose the message. But if, 55 seconds into the 60-second visibility timeout, the server tells SQS that it is done by deleting the message, the message is removed from the queue, and we are fine.
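The receive, process, delete cycle described above might look like the sketch below. It assumes a boto3-style SQS client and a caller-supplied `handler` function; both names, like `process_batch` itself, are placeholders for this example. A message is deleted only after the handler succeeds, so a failed message simply becomes visible again once the timeout expires.

```python
def process_batch(sqs, queue_url, handler, visibility_timeout=60):
    """Receive up to 10 messages, process each, and delete the ones that succeed."""
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,
        VisibilityTimeout=visibility_timeout,  # lock the messages for this many seconds
    )
    processed = 0
    for message in response.get("Messages", []):
        try:
            handler(message["Body"])
        except Exception:
            # Do not delete: the message reappears after the visibility timeout.
            continue
        # Deleting is how the consumer tells SQS "I am done with this one".
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        processed += 1
    return processed
```

If processing regularly takes longer than the timeout, the same message will be handed to a second consumer, so the timeout should comfortably exceed the expected processing time.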

SQS Long vs. Short

There are two ways to retrieve messages from an SQS queue: long polling and short polling. The default is short polling.

Short polling means that the backend server connects and checks whether there is any message in the queue. If no message is found, the backend server disconnects and keeps repeating that until it finds messages to process.

But the short polling approach has some downsides. First, it burns CPU cycles on the backend server. Second, we are spending money on all of those extra API calls (ReceiveMessage), because API calls to SQS have a cost.

How can we improve that approach? The short answer is long polling, where we specify how long each request should wait for a message.

Long polling enables us to connect and then wait for a while. This period is defined when our backend server calls the ReceiveMessage API and sets a value greater than zero for the parameter WaitTimeSeconds. The maximum long polling wait time is 20 seconds.
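To get a feel for the difference in API calls, here is a rough back-of-the-envelope calculation. It assumes an idle queue for one hour and a consumer that short-polls once per second; that interval is an assumption for illustration, not a fixed property of SQS.

```python
import math


def empty_receive_calls(idle_seconds: int, seconds_per_call: float) -> int:
    """Number of ReceiveMessage calls an idle consumer makes while the queue is empty."""
    return math.ceil(idle_seconds / seconds_per_call)


ONE_HOUR = 60 * 60
# Assumed: the consumer short-polls once per second.
short_polling_calls = empty_receive_calls(ONE_HOUR, 1)
# Each long poll on an empty queue waits the full 20 seconds before returning.
long_polling_calls = empty_receive_calls(ONE_HOUR, 20)

print(short_polling_calls, long_polling_calls)  # 3600 180
```

Under these assumptions, long polling makes 20 times fewer requests while the queue is empty.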

Long polling offers these advantages:

- Retrieve messages as soon as they become available.

- Reduce false empty responses by querying all of the AWS SQS servers instead of a subset.

- Reduce empty responses by letting AWS SQS wait until a message arrives in the queue before sending a response. Unless the connection times out, the response to the ReceiveMessage request includes at least one message, up to the maximum number of messages defined in the ReceiveMessage API call. Occasionally, you might receive empty responses even when a queue still holds messages, mainly if you set a low value for ReceiveMessageWaitTimeSeconds.

Long polling is not enabled by default, but for the exam we should lean toward answers that use long polling. There are some scenarios where short polling does make sense, for example when the application can’t use multiple threads and a single thread has to poll more than one queue. In general, though, the AWS exam prefers long polling most of the time.
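A long-polling receive call could be sketched as follows. The function assumes a boto3-style SQS client is passed in; `long_poll` is an illustrative name. Setting `WaitTimeSeconds` on the call enables long polling for that request only, while the queue attribute `ReceiveMessageWaitTimeSeconds` sets the default for every consumer of the queue.

```python
MAX_WAIT_SECONDS = 20  # the maximum long polling wait time


def long_poll(sqs, queue_url, wait_seconds=MAX_WAIT_SECONDS, max_messages=10):
    """Wait up to wait_seconds for messages and return them (possibly an empty list)."""
    if not 0 <= wait_seconds <= MAX_WAIT_SECONDS:
        raise ValueError("wait time must be between 0 and 20 seconds")
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=wait_seconds,  # greater than zero means long polling
    )
    return response.get("Messages", [])


# To make long polling the queue default instead, one could set:
#   sqs.set_queue_attributes(
#       QueueUrl=queue_url,
#       Attributes={"ReceiveMessageWaitTimeSeconds": "20"},
#   )
```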

SQS Queue Depth

What is SQS queue depth? How do we scale our processing? What happens if we receive many messages and need to process them in time without overloading our environment, in this case, our backend servers? We know that we can use CloudWatch metrics for EC2, such as CPU usage, to trigger scaling up or down. However, there may be a more suitable metric for our scenario: the SQS queue depth, that is, the number of messages waiting in the queue.

SQS integrates with CloudWatch, so we can view and analyze metrics for our queues. That means we can use those metrics with Auto Scaling to launch new EC2 instances to process our queue.

Check for all metrics available for SQS on CloudWatch here.
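To make the idea concrete, the sketch below reads the approximate queue depth and derives a worker count from it. In CloudWatch, the corresponding metric is `ApproximateNumberOfMessagesVisible`; here the value is read directly from the queue through the `ApproximateNumberOfMessages` attribute. The scaling rule, the function names, and the numbers are assumptions for illustration, not an official Auto Scaling policy.

```python
import math


def queue_depth(sqs, queue_url):
    """Return the approximate number of messages waiting in the queue."""
    response = sqs.get_queue_attributes(
        QueueUrl=queue_url,
        AttributeNames=["ApproximateNumberOfMessages"],
    )
    # Attribute values come back as strings.
    return int(response["Attributes"]["ApproximateNumberOfMessages"])


def desired_workers(depth, messages_per_worker, min_workers=1, max_workers=10):
    """Assumed rule: one worker per messages_per_worker backlog, within fixed bounds."""
    needed = math.ceil(depth / messages_per_worker)
    return max(min_workers, min(max_workers, needed))


# Example: a backlog of 250 messages with 100 messages per worker needs 3 workers.
print(desired_workers(250, 100))  # 3
```

The bounds keep the fleet from scaling to zero on an empty queue or growing without limit during a spike.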

Exam Tips

SQS will show up very often on the exam.

It’s essential to understand all the configurations we covered in this article, including the impact and expected behavior of changing them.

Let’s go through more exam recommendations.

Understand the main configuration options

As we saw before, know all of the configurations. This article didn’t cover every configuration that the exam may require, so go through the AWS Console, walk through the process of creating a queue, see all the options available, and play with them.

Nothing stays permanently.

Recall that the maximum retention period is 14 days.

How to troubleshoot common issues

You will be asked to troubleshoot. Why did we lose messages? Why are messages coming back? Most issues are related to a wrong configuration. Perhaps the visibility timeout was set too low? For example, it is configured to 10 seconds, but the backend server needs 30 seconds to process each message, so the lock is released before processing finishes and another consumer receives the same message. Or, in another scenario, perhaps a delivery delay is defined when it shouldn’t be.
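Those two checks can be expressed as a tiny diagnostic helper. This is a hypothetical sketch: it takes the attribute dictionary that `get_queue_attributes` returns (with `VisibilityTimeout` and `DelaySeconds` as strings) and the processing time you expect per message, and it reports the misconfigurations described above.

```python
def diagnose(attributes, processing_seconds):
    """Return a list of likely misconfigurations for the given queue attributes."""
    findings = []
    visibility = int(attributes.get("VisibilityTimeout", "30"))
    delay = int(attributes.get("DelaySeconds", "0"))
    if visibility < processing_seconds:
        # The lock expires mid-processing, so the message is delivered again.
        findings.append("visibility timeout shorter than processing time")
    if delay > 0:
        # Messages stay hidden from consumers for this long after being sent.
        findings.append("delivery delay is set")
    return findings


# The attributes could be fetched with the boto3 SQS client roughly like this:
#   attrs = sqs.get_queue_attributes(QueueUrl=queue_url, AttributeNames=["All"])["Attributes"]
#   print(diagnose(attrs, processing_seconds=30))
```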

Polling

Understand the difference between long and short polling. Long polling is cheaper and saves CPU cycles, and that advantage is what we need to focus on for the exam.

Size

Remember that the size limit is 256 kilobytes, and there is no restriction on the text format. It could be JSON, YAML, or any other format.

Your next homework

For the AWS exam, we recommend that you also be prepared to answer questions about the SQS Dead-Letter Queue and the difference between FIFO and SQS Standard queues. We have articles covering both topics.

Best Practices

1 – Use VPC endpoints to access SQS privately.

2 – Create IAM roles for other resources and services that require SQS access

3 – Double-check if the queues aren’t publicly available

4 – Adopt least-privilege access

5 – Enforce server-side encryption (it’s not enabled by default, so you need to enable it manually)

6 – Implement encryption of data in transit
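For the last item, one common way to enforce encryption in transit is a queue policy that denies any request not made over TLS. The sketch below builds such a policy with the `aws:SecureTransport` condition key; the helper name and the ARN in the comment are placeholders, and you should review the policy against your own access requirements before applying it.

```python
import json


def deny_insecure_transport_policy(queue_arn):
    """Build a queue policy that rejects requests sent without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "sqs:*",
                "Resource": queue_arn,
                # aws:SecureTransport is "false" when the request did not use TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# Applied with the boto3 SQS client (hypothetical ARN), roughly:
#   policy = deny_insecure_transport_policy("arn:aws:sqs:us-east-1:123456789012:orders")
#   sqs.set_queue_attributes(QueueUrl=queue_url, Attributes={"Policy": json.dumps(policy)})
```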

Learn More

What is the advantage of using SQS FIFO?

How to use SQS Dead-Letter Queue?

What you should know about High Availability on AWS

Which AWS services help us to decouple our application?