AWS S3 Batch Operations

AWS S3 Batch Operations let you perform large-scale operations on objects stored in Amazon S3. You provide a list of objects (a manifest) and the operation to perform on them. S3 then manages the execution, tracks progress, and can write a completion report when the job finishes. This can be a huge time-saver for busy cloud engineers who need to process large numbers of objects quickly and efficiently. In this blog post, we'll take a closer look at how AWS S3 Batch Operations work and how you can use them to streamline your data processing workflow.

S3 Batch Operations is just one of the many features AWS provides. Download the AWS Learning Kit to learn more.

What is AWS S3 Batch Operations, and why would you use them?

AWS S3 Batch Operations allow you to manage large numbers of objects efficiently and cost-effectively. With a single job, you can apply routine operations such as copying objects, invoking a Lambda function, or changing storage classes to millions or even billions of objects. The alternative is custom scripts that you have to write, run, and monitor yourself. This makes it easier for businesses to manage the data in their cloud architectures.

Some typical use cases:

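- Copy objects, including between buckets
- Set or change object storage classes (as part of a copy)
- Invoke AWS Lambda functions to run custom code on each object
- Encrypt objects with server-side encryption (as part of a copy)
- Replace or delete object tags, and replace object metadata (as part of a copy)
- Replace access control lists (ACLs)
- Apply Object Lock retention settings and legal holds
- Restore objects archived in S3 Glacier storage classes
- Produce a completion report that records the result for each object

Each job performs a single operation on every object in its manifest. To apply several operations, run several jobs.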

Integrated Services

Amazon S3 Batch Operations works alongside several AWS services that add power and flexibility to your data processing jobs. These include the Amazon S3 Glacier storage classes, AWS Lambda, Amazon EventBridge (formerly Amazon CloudWatch Events), and Amazon SNS. Some of these integrations are direct. Others go through an intermediary such as a Lambda function or an EventBridge rule. The list below describes them.

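–Amazon S3 Glacier: Batch Operations can copy objects into one of the lower-cost S3 Glacier storage classes. It can also initiate restores of objects that are already archived. This makes storing large amounts of rarely accessed data more cost-effective. Keep in mind that archived objects must be restored before they can be read, so retrieval is slower than from S3 Standard.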

–AWS Lambda: AWS Lambda functions can be triggered from Amazon S3 Batch Operations jobs, allowing you to create sophisticated workflows that scale automatically.

–Amazon EventBridge (formerly Amazon CloudWatch Events): An EventBridge rule can start an Amazon S3 Batch Operations job, typically by invoking a Lambda function that calls the CreateJob API. This allows businesses to respond to changes in their data sets without manual intervention.

–Amazon SNS: Batch Operations records job status changes as AWS CloudTrail events. An EventBridge rule that matches those events can publish an Amazon SNS notification when a job completes or fails. This allows businesses to stay on top of their data processing jobs and ensure they are running as expected.

–Amazon SES: Amazon SES can send email notifications when an Amazon S3 Batch Operations job completes or fails. For example, a Lambda function invoked by the same kind of EventBridge rule could send the email. This helps businesses quickly identify issues with their data processing jobs and respond accordingly.

–Amazon DynamoDB: A Lambda function invoked by a Batch Operations job can read from or write to DynamoDB, for example to record metadata about each processed object. Batch Operations itself works on S3 objects, so DynamoDB is not a direct source or destination for a job.

These are some of the AWS services you can combine with S3 Batch Operations. Together, they let businesses automate their data processing workflows and monitor how those workflows perform.

Some Features:

- Job Cloning: In the Amazon S3 console, you can clone an existing job, adjust its parameters, and submit it as a new job. Cloning is useful for re-running a failed job or for making revisions to an existing one.

- Programmatic Job Creation: To automate your workflow, configure an S3 event notification on the bucket that receives your inventory reports. A Lambda function then runs each time a new report arrives in the inventory folder. It can create a Batch Operations job from that report immediately, without waiting for someone to start it manually.

- Job Priorities: You can have several jobs running at the same time in each AWS Region. Jobs with a higher priority number generally take precedence over lower-priority ones. To change a job's priority, select the job in the S3 console and choose Update priority.

- CSV Object Lists: If the objects you want to process don't share a common prefix, you can list them in a CSV manifest. Start by generating an inventory report, then filter it down to the objects you need based on their key names or other attributes. For example, suppose you're using Amazon Comprehend for sentiment analysis of your documents. You can use the inventory report to add only new, not-yet-analyzed documents to a CSV file. This lets you build your subset without manually searching through every object in the bucket.
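A CSV manifest for S3 Batch Operations is a plain CSV file with one object per line. Each line lists the bucket name and object key, and optionally a version ID. The sketch below builds one from a list of (bucket, key) pairs and keeps only `.txt` documents that have not been analyzed yet.

It assumes the keys are plain, decoded strings, for example from `list_objects_v2` or from an inventory file you have already decoded. The function name, the suffix filter, and the `already_analyzed` set are hypothetical. Object keys in the manifest are expected to be URL-encoded, so we encode them with `urllib.parse.quote`. Check the S3 documentation for the exact encoding rules your keys need.

```python
import csv
import io
from urllib.parse import quote


def build_csv_manifest(rows, already_analyzed, suffix=".txt"):
    """Build S3 Batch Operations CSV manifest text from (bucket, key) pairs.

    rows: iterable of (bucket, key) tuples with plain (decoded) keys.
    already_analyzed: hypothetical set of keys that were processed before.
    suffix: hypothetical filter; only keys ending with it are included.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for bucket, key in rows:
        if not key.endswith(suffix) or key in already_analyzed:
            continue  # skip non-documents and documents analyzed earlier
        # URL-encode the key; quote() leaves "/" unencoded by default.
        writer.writerow([bucket, quote(key)])
    return out.getvalue()


rows = [
    ("docs-bucket", "reports/q1 summary.txt"),
    ("docs-bucket", "reports/q2.txt"),
    ("docs-bucket", "images/logo.png"),
]
print(build_csv_manifest(rows, already_analyzed={"reports/q2.txt"}), end="")
# prints: docs-bucket,reports/q1%20summary.txt
```

To use it, you would upload the resulting text to S3. The job's manifest would then reference it with the `S3BatchOperations_CSV_20180820` format and the fields `Bucket` and `Key`.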

Manifest object ETag

The manifest object ETag is a safeguard that helps protect the integrity of Amazon S3 Batch Operations jobs. When you create a job, you specify the location of the manifest together with that manifest object's ETag. The manifest is the CSV file, or the inventory report's manifest.json, that lists the objects to process. Amazon S3 assigns an ETag to each version of an object's content, so the ETag tells Batch Operations exactly which version of the manifest you intend to use.

If the manifest object has been overwritten, its ETag no longer matches the one you supplied. In that case, the job fails instead of silently processing a different list of objects. This protects you from processing an outdated object list, or one that was modified after you reviewed it.

Overall, the manifest object ETag is a simple but valuable tool for making sure your S3 batch jobs process exactly the list of objects you intended.
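The sketch below shows the manifest `Location` structure that the S3 Control `CreateJob` API expects. It assumes you have already fetched the manifest's ETag, for example with `head_object`. The helper name and the example values are hypothetical. `head_object` returns the ETag wrapped in double quotes. We strip them as a precaution, because the AWS examples we know of pass the bare value.

```python
def manifest_location(bucket, key, etag, version_id=None):
    """Return the Manifest.Location dict for an S3 Batch Operations job.

    etag: the manifest object's ETag, with or without surrounding quotes.
    version_id: optional version of the manifest object to pin.
    """
    location = {
        "ObjectArn": f"arn:aws:s3:::{bucket}/{key}",
        # Strip the double quotes that head_object includes in its ETag.
        "ETag": etag.strip('"'),
    }
    if version_id is not None:
        location["ObjectVersionId"] = version_id
    return location


print(manifest_location("manifest-bucket", "jobs/manifest.csv",
                        '"60e460c9d1046e73f7dde5043ac3ae85"'))
```

In a versioned bucket, also passing `version_id` pins the exact manifest version alongside its ETag.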

Why would we use Amazon S3 Batch Operations instead of triggering a Lambda function directly from an S3 bucket configured for a prefix?

S3 event notifications fire only for new events, such as an object being created under a prefix. That makes it hard to use them on objects that already exist in a bucket. Amazon S3 Batch Operations, by contrast, runs an operation across an existing list of objects, potentially millions of them, as a single managed job.

S3 handles the parallel invocation of your Lambda function and tracks the progress of every task. It also retries tasks that report temporary failures and writes a completion report listing which objects succeeded and which failed. Building the same capability on top of event notifications would require custom code to enumerate objects, throttle invocations, and track failures.

You can also narrow the set of objects a job processes, for example by building a manifest that contains only objects matching criteria such as a key prefix or size. This makes large-scale data operations easier to manage efficiently and cost-effectively.
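A Lambda function invoked by S3 Batch Operations receives a batch of tasks instead of an S3 event notification, and it must report a result for each task. The sketch below follows the invocation schema version 1.0 event and response format as we understand it from the S3 documentation. `process_object` is a hypothetical placeholder for your own per-object work. Keys in the event are URL-encoded, so the handler decodes them before use. Returning `TemporaryFailure` instead of `PermanentFailure` for a task asks Batch Operations to retry it.

```python
from urllib.parse import unquote


def process_object(bucket, key):
    """Hypothetical per-object work; replace with your own logic."""
    if not key:
        raise ValueError("empty object key")
    return f"processed s3://{bucket}/{key}"


def lambda_handler(event, context):
    """Handle an S3 Batch Operations invocation (schema version 1.0)."""
    results = []
    for task in event["tasks"]:
        # s3BucketArn looks like "arn:aws:s3:::my-bucket".
        bucket = task["s3BucketArn"].split(":::", 1)[1]
        key = unquote(task["s3Key"])  # keys arrive URL-encoded
        try:
            result_string = process_object(bucket, key)
            result_code = "Succeeded"
        except Exception as exc:  # report the failure instead of crashing
            result_code = "PermanentFailure"
            result_string = str(exc)
        results.append(
            {"taskId": task["taskId"], "resultCode": result_code, "resultString": result_string}
        )
    return {
        "invocationSchemaVersion": "1.0",
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }
```

Because Batch Operations reads these result codes, failed objects show up in the completion report. You don't need separate error tracking.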

How to set up an AWS S3 Batch Operation

Setting up an AWS S3 Batch Operation can look intimidating at first, but the process is relatively simple once you have created an IAM role with the appropriate permissions. S3 Batch Operations assumes this role to run the job. The role therefore needs permission to read the manifest and to act on the objects in the job, for example reading, writing, or deleting them, depending on the operation. It also needs permission to write the completion report. If the job works across AWS accounts, the bucket policies in the other account must also grant access to the role. Once these steps are complete, you can create and launch a job without much additional preparation.
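As a starting point, the IAM role needs a trust policy that lets the S3 Batch Operations service principal, `batchoperations.s3.amazonaws.com`, assume it. The minimal sketch below builds that document in Python and prints it as JSON. You attach the permissions policy that grants access to your buckets separately, and its contents depend on the operation.

```python
import json


def batch_operations_trust_policy():
    """Trust policy allowing S3 Batch Operations to assume the job's role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "batchoperations.s3.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(batch_operations_trust_policy(), indent=2))
```

You could pass the resulting JSON string as the `AssumeRolePolicyDocument` argument of IAM's `create_role` call.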

Amazon S3 Inventory and S3 Batch Operations

The S3 Inventory feature of Amazon Simple Storage Service (S3) is closely related to AWS S3 Batch Operations because it provides a reliable way to track, manage, and audit the content stored in S3. It generates inventory reports on a daily or weekly schedule. Each report lists the objects in a bucket, or under a specific prefix, together with their metadata. These reports are useful for auditing, for example to check the encryption or replication status of your objects. Because the reports can be delivered in an easily readable CSV format, you can quickly identify objects that need further attention by analyzing their metadata.

With AWS S3 Batch Operations, you can use a generated inventory report (its manifest.json file) as the job's manifest. S3 then performs the chosen operation on every object listed in the inventory.
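The sketch below connects this with programmatic job creation. A Lambda function is triggered by an S3 event notification when an inventory report's `manifest.json` is delivered. It builds the parameters for the S3 Control `CreateJob` API and submits them with boto3, and the job invokes a worker Lambda function on every object in the inventory.

The account ID, role ARN, function ARN, report bucket, and environment variable names are hypothetical placeholders. The manifest ETag is taken from the event notification record. The idempotency token is derived from the manifest's bucket, key, and ETag, so a retried invocation sends the same token and should not create a duplicate job.

```python
import os
import uuid
from urllib.parse import unquote_plus


def job_params_from_event(event, account_id, role_arn, function_arn, report_bucket, priority=10):
    """Build S3 Control CreateJob parameters when an inventory manifest.json arrives.

    Returns None for any other object (for example, the inventory's data files).
    Assumes each event carries a single record, which is typical for S3 notifications.
    """
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    key = unquote_plus(record["s3"]["object"]["key"])  # event keys are URL-encoded
    etag = record["s3"]["object"]["eTag"]
    if not key.endswith("manifest.json"):
        return None
    # Deterministic token: a retried invocation for the same manifest reuses it.
    token = str(uuid.uuid5(uuid.NAMESPACE_URL, f"{bucket}/{key}/{etag}"))
    return {
        "AccountId": account_id,
        "ConfirmationRequired": False,  # run without waiting for manual confirmation
        "Operation": {"LambdaInvoke": {"FunctionArn": function_arn}},
        "Manifest": {
            "Spec": {"Format": "S3InventoryReport_CSV_20161130"},
            "Location": {"ObjectArn": f"arn:aws:s3:::{bucket}/{key}", "ETag": etag},
        },
        "Report": {
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "Prefix": "batch-reports",
            "ReportScope": "AllTasks",
        },
        "Priority": priority,
        "RoleArn": role_arn,
        "ClientRequestToken": token,
    }


def lambda_handler(event, context):
    import boto3  # included in the AWS Lambda Python runtime

    params = job_params_from_event(
        event,
        account_id=os.environ["ACCOUNT_ID"],  # hypothetical environment variables
        role_arn=os.environ["BATCH_ROLE_ARN"],
        function_arn=os.environ["WORKER_FUNCTION_ARN"],
        report_bucket=os.environ["REPORT_BUCKET"],
    )
    if params is None:
        return None
    return boto3.client("s3control").create_job(**params)["JobId"]
```

Keeping the parameter building in a separate function lets you test it without calling AWS.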

Giving permissions

Before launching S3 Batch Operations, you need to grant suitable permissions. The user or role that creates a job needs the s3:CreateJob permission. It also needs the iam:PassRole permission so that it can pass the IAM role associated with the job to Batch Operations.
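A minimal sketch of an identity-based policy for the person or role that creates jobs might look like the following. The account ID and role name are hypothetical. The `iam:PassedToService` condition is an optional tightening we added so that the role can only be passed to S3 Batch Operations.

```python
import json


def job_creator_policy(account_id, role_name):
    """Policy allowing a principal to create Batch Operations jobs with one role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:CreateJob", "Resource": "*"},
            {
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
                # Only allow passing the role to the S3 Batch Operations service.
                "Condition": {
                    "StringEquals": {"iam:PassedToService": "batchoperations.s3.amazonaws.com"}
                },
            },
        ],
    }


print(json.dumps(job_creator_policy("111122223333", "S3BatchOpsRole"), indent=2))
```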

The permissions that the job's IAM role needs depend on the operation you run. Keep the following points in mind when you write its policy:

- In every case, Amazon S3 needs permission to read the manifest object from your bucket and, if you request one, to write a completion report. Every operation's policy should therefore include these permissions.

- If you use an Amazon S3 Inventory report as the manifest, S3 Batch Operations needs permission to read the manifest.json object and all of its associated CSV data files.

- If your manifest specifies object version IDs, version-specific permissions such as s3:GetObjectVersion are required.

- To run S3 Batch Operations on objects encrypted with AWS KMS keys, the job's IAM role must have access to those KMS keys.
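Putting these points together, the sketch below builds a permissions policy for the role of a hypothetical copy job. The role reads objects (including specific versions) from a source bucket and writes copies to a target bucket. It also reads the manifest (including inventory data files) and writes a completion report, and it can optionally use a KMS key.

All bucket names and the key ARN are placeholders. Depending on the copy options you choose, such as tags or ACLs, the role may need additional actions.

```python
import json


def copy_job_role_policy(source_bucket, target_bucket, manifest_bucket,
                         report_bucket, kms_key_arn=None):
    """Permissions for a hypothetical Batch Operations copy job's IAM role."""
    statements = [
        {   # Read the objects listed in the manifest, including specific versions.
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:GetObjectVersion"],
            "Resource": f"arn:aws:s3:::{source_bucket}/*",
        },
        {   # Write the copies.
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": f"arn:aws:s3:::{target_bucket}/*",
        },
        {   # Read the manifest (manifest.json and CSV data files for inventories).
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:GetObjectVersion"],
            "Resource": f"arn:aws:s3:::{manifest_bucket}/*",
        },
        {   # Write the completion report.
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": f"arn:aws:s3:::{report_bucket}/*",
        },
    ]
    if kms_key_arn is not None:
        # Decrypt encrypted source objects and encrypt the new copies.
        statements.append({
            "Effect": "Allow",
            "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
            "Resource": kms_key_arn,
        })
    return {"Version": "2012-10-17", "Statement": statements}


print(json.dumps(copy_job_role_policy("src", "dst", "manifests", "reports"), indent=2))
```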

What are some of the most common use cases for AWS S3 Batch Operations?

AWS S3 Batch Operations is a powerful feature for running large-scale operations on Amazon Simple Storage Service (S3) objects. Common use cases include copying objects from one bucket to another, re-encrypting objects, and updating object tags. Through Lambda functions, it also supports custom processing such as file format conversion. It can also copy objects into S3 Glacier storage classes and initiate restores of objects that are already archived. By using these capabilities, you can manage your data with more agility and efficiency at scale.

A Real Scenario: Batch-transcoding videos

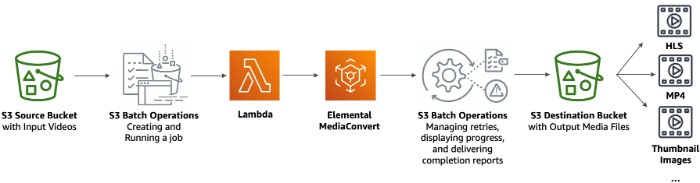

S3 Batch Operations provide a convenient way to invoke a Lambda function for every video in an S3 source bucket. In this scenario, the Lambda function submits each video to AWS Elemental MediaConvert, which performs the transcoding.

Media content creators face a challenge: viewers watch on a wide range of devices, old and new, with different screen sizes and capabilities. To make their videos playable on all of them, creators must convert each video into several formats and bitrates. That becomes difficult when a large library needs converting.

If you store a large video library in Amazon S3, AWS Elemental MediaConvert offers a good solution. With S3 Batch Operations, you can invoke a Lambda function for each existing video in the S3 source bucket. The function submits a MediaConvert job that transcodes the video into multiple file types, matching the resolution, size, and format each player or device requires. MediaConvert then writes the converted media to an S3 destination bucket.
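Inside the Lambda function, each task could be turned into a MediaConvert job request along these lines. This minimal sketch assumes you have already created a MediaConvert job template that defines the output formats, bitrates, and S3 destination, so the request only supplies the input file. The template name and role ARN are hypothetical. Depending on your template, you may also need to include input settings such as audio selectors.

```python
def mediaconvert_job_request(bucket, key, role_arn, template_name):
    """Build arguments for MediaConvert's CreateJob from one source video."""
    return {
        "Role": role_arn,  # IAM role MediaConvert uses to read and write S3
        "JobTemplate": template_name,  # hypothetical template with outputs defined
        "Settings": {
            # The template supplies the output groups; the job supplies the input.
            "Inputs": [{"FileInput": f"s3://{bucket}/{key}"}]
        },
        # Record the source object so the job can be traced back later.
        "UserMetadata": {"sourceKey": key},
    }


request = mediaconvert_job_request(
    "video-source-bucket",
    "raw/launch-event.mov",
    "arn:aws:iam::111122223333:role/MediaConvertRole",
    "multi-device-template",
)
print(request["Settings"]["Inputs"][0]["FileInput"])
# prints: s3://video-source-bucket/raw/launch-event.mov
```

The Lambda function would pass this dictionary to the boto3 MediaConvert client's `create_job` method. It would then report `Succeeded` for the task once the job has been submitted.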

Here are some other examples of how you can use S3 Batch Operations in your business:

1. Automated Archival System with S3 Batch Operations: Businesses that must retain large amounts of data for long periods can use S3 Batch Operations as part of an automated archiving system. For example, a scheduled process can build a manifest of objects that have reached a certain age. A Batch Operations copy job then copies those objects into an archive bucket or prefix that uses a lower-cost storage class. This helps reduce storage costs while keeping important documents and files safe and secure. Because archived files are organized under a consistent key structure, administrators can also find specific archived items quickly.

2. Automating Image Resizing Using S3 Batch Operations: Images are an essential part of modern websites, and high-resolution images can be large. It's useful to have multiple versions of each image for different devices and screen sizes. Businesses can use S3 Batch Operations to invoke an AWS Lambda function that generates resized versions of the images already stored in an S3 bucket. S3 event notifications can handle images uploaded later. Generating only the sizes you actually serve helps keep storage costs down.

3. Automating Data Transformation for Big Data Analysis with S3 Batch Operations: Businesses that collect vast amounts of data from multiple sources often find it difficult to bring that data together in a form that is easy to analyze. Incoming data can be handled as it arrives, for example with Amazon Simple Queue Service (SQS) queues feeding AWS Glue or AWS Lambda jobs that convert it to a standard format. S3 Batch Operations can invoke a Lambda function to apply the same transformation to data already stored in S3. The transformed data is then stored in S3 buckets for analysis with tools like Amazon Redshift or Amazon Athena. This automates the whole process, from collecting data from multiple sources to transforming it into formats ready for analysis.
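For the archival example, the archive step could be expressed as a Batch Operations copy operation in a `CreateJob` request. This sketch builds only the `Operation` part of the request and assumes a hypothetical archive bucket and key prefix. It restricts the storage class to the S3 Glacier classes.

The copy leaves the original objects in place. Deleting them, for example with an S3 Lifecycle rule, is a separate step. Batch Operations copy jobs are also limited to objects of up to 5 GB.

```python
ARCHIVE_STORAGE_CLASSES = {"GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}


def archive_copy_operation(archive_bucket, prefix="archive/", storage_class="GLACIER"):
    """Build the Operation element of a CreateJob request that copies objects
    into an archive bucket using an S3 Glacier storage class."""
    if storage_class not in ARCHIVE_STORAGE_CLASSES:
        raise ValueError(f"not an S3 Glacier storage class: {storage_class}")
    return {
        "S3PutObjectCopy": {
            "TargetResource": f"arn:aws:s3:::{archive_bucket}",
            "TargetKeyPrefix": prefix,  # keeps archived objects under one prefix
            "StorageClass": storage_class,
            "MetadataDirective": "COPY",  # keep the original object metadata
        }
    }


print(archive_copy_operation("corp-archive", storage_class="DEEP_ARCHIVE"))
```

You would combine this `Operation` with a manifest of aged objects, a report configuration, a priority, and a role in the full `create_job` call.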

These are just some of the scenarios where businesses can use S3 Batch Operations to help solve their problems with production systems. It is a powerful tool that allows companies to streamline their data management processes and save time and resources. With S3 Batch Operations, businesses can quickly and easily automate their production systems to increase efficiency and reduce costs.

How to get started with using AWS S3 Batch Operations in your own environment

Getting started with AWS S3 Batch Operations is straightforward. First, familiarize yourself with the essential components of a job: the manifest that lists the objects, the operation to perform, the IAM role that Batch Operations assumes, and the completion report. Next, make sure your environment is configured securely. Use IAM policies, rather than object access control lists (ACLs), to control who can create and manage jobs and what the job's role can access. That way, batch operations run without the risk of unauthorized access. Finally, monitor your jobs through the S3 console, the DescribeJob API, and the completion reports to confirm that tasks succeed and executions go as expected. Following these steps should help you get up and running quickly with AWS S3 Batch Operations in your own environment.
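For the monitoring step, the S3 Control `DescribeJob` API returns a job's status and a progress summary. The sketch below condenses that response, a dictionary like the one boto3's `describe_job` returns, into a few numbers. The sample values are made up for illustration.

```python
def summarize_job(response):
    """Condense a DescribeJob response into status, percent done, and failures."""
    job = response["Job"]
    progress = job.get("ProgressSummary", {})
    total = progress.get("TotalNumberOfTasks", 0)
    succeeded = progress.get("NumberOfTasksSucceeded", 0)
    failed = progress.get("NumberOfTasksFailed", 0)
    done = succeeded + failed
    percent = round(100 * done / total, 1) if total else 0.0
    return {"status": job["Status"], "percent_complete": percent, "failed": failed}


sample = {
    "Job": {
        "JobId": "example-job-id",
        "Status": "Active",
        "ProgressSummary": {
            "TotalNumberOfTasks": 200,
            "NumberOfTasksSucceeded": 150,
            "NumberOfTasksFailed": 10,
        },
    }
}
print(summarize_job(sample))
# prints: {'status': 'Active', 'percent_complete': 80.0, 'failed': 10}
```

In practice, you would call `boto3.client("s3control").describe_job(AccountId=..., JobId=...)` periodically. If a job needs to run sooner, you can raise its priority with `update_job_priority`.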

Conclusion

AWS S3 Batch Operations can greatly simplify managing bulk operations on your data. With its many configurable options, you can tailor a solution to your own environment to maximize efficiency. However, you should monitor your batch jobs routinely to make sure they run smoothly. Given the relatively simple setup and clear configuration options, it's no surprise that AWS S3 Batch Operations have become increasingly common in cloud-focused architectures and enterprise solutions. Whether your use case revolves around performance maintenance, capacity optimization, or cost management, this often-overlooked feature offers real value.

Getting started with AWS S3 Batch Operations can seem daunting at first, so we recommend downloading our free AWS Learning Kit before diving deeper into implementation. With the tools in our kit, you'll be ready to take on batch operations challenges head-on.

Learn more about AWS efficiently with the AWS Learning Kit.